Vol 8, No 1 (2024)

https://doi.org/10.7577/njcie.5736

Article

High School Teachers’ Adoption of Generative AI: Antecedents of Instructional AI Utility in the Early Stages of School-Specific Chatbot Implementation

Eyvind Elstad

University of Oslo

Email: eyvind.elstad@ils.uio.no

Harald Eriksen

Oslo Metropolitan University

Email: harald.eriksen@oslomet.no

Abstract

In 2023, the breakthrough of generative artificial intelligence (AI) led to its adoption, to varying degrees, in schools. While some teachers expressed frustration over pupil misuse of generative AI, others advocated for the availability of a school-relevant chatbot for pupil use. In October 2023, a local chatbot intended to meet that goal was launched by Oslo Municipality. Six to eight weeks later, an investigation was conducted to examine how 236 teachers perceived the opportunities and limitations of this new technology. The examination used structural equation modelling to explore antecedents of instructional AI utility. The analysis shows that the pathway between instructional self-efficacy and AI utility has the highest positive value, while the pathway between management and AI utility has a low numerical value. This last finding can be interpreted as indicating untapped management potential and must be seen in the context of the fact that no guidelines for the use of AI in schools existed when the survey was conducted. In addition, the pathway between colleague discussion and AI utility has a relatively low numerical value, which suggests that the potential for learning through discussion among colleagues could be utilized to an even greater degree. The pathway between management and colleague discussion is notable. Implications and limitations are discussed.

Keywords: generative AI, chatbot, teachers, Norway, high school, instructional AI utility

Introduction

While artificial intelligence (AI) is hardly a new phenomenon, 2023 witnessed a breakthrough for ChatGPT and other chatbots known as generative AI. This development has created both enthusiasm and concern for education professionals (Dell’Acqua et al., 2023; Farrokhnia et al., 2023; Fischer, 2023; Pea et al., 2022). In today’s technology-driven world, generative AI is already in the process of transforming several areas of society (Acemoglu & Lensman, 2023), and experimental evidence from business has shown its positive effects on productivity (Noy & Zhang, 2023). Since ChatGPT was launched in late 2022, generative AI has been adopted to varying degrees in schools, leading to an increased focus on the need for developing AI literacy. AI literacy among teachers refers to the understanding and competency teachers have in relation to AI (Ng et al., 2021). For teachers, generative AI has posed the following question: how should they develop instructional AI literacy to keep up with rapid technological evolution? These advances present challenges for teachers worldwide but also open up new opportunities. In the early stages of school-specific chatbot implementation, several individual and organizational antecedents can influence the utility or effectiveness of instructional AI. In the context of instructional AI implementation in schools, organizational antecedents refer to the specific factors within the school environment that can influence the utility or effectiveness of using chatbots for instructional purposes. An individual antecedent refers to a personal trait that might influence a person's behavior or action. The readiness of a school and its staff to embrace instructional AI may also play a role. While some have embraced the advancement of AI in educational settings, there is a necessary degree of skepticism surrounding its implementation and the potential drawbacks associated with its widespread use (Nagelhout, 2023).
Rather than opting for an outright prohibition of AI in classrooms or allowing its unrestricted integration across all learning domains, several educators recognize the need for a balanced approach (Cao & Dede, 2023). They acknowledge that AI tools should serve to assist pupils in their cognitive processes rather than taking over the learning experience. It is crucial to prioritize the learning journey over final outcomes, giving due importance to learner agency, leveraging various sources of motivation, nurturing skills that AI struggles to replicate easily, and promoting intelligence augmentation by establishing effective human–AI partnerships (Cao & Dede, 2023), preferably as part of an ecosystem that also includes adaptive learning technology (Moltudal et al., 2020; Moltudal et al., 2023) and cultures of participation (Pagano et al., 2023). Encouraging collaboration and communication among teachers can enhance the utility of instructional improvements (Bryk & Schneider, 2002). We think the same can apply to chatbot implementation in schools. Regular meetings, feedback sessions, and forums for sharing good practices can help identify areas for improvement and promote innovative uses of chatbots.

Several studies have discussed how generative AI can be used in teaching individual subjects: geography (Chang & Kidman, 2023), media education (Pavlik, 2023), computer science (Yilmaz & Yilmaz, 2023), interdisciplinary learning (Zhong et al., 2023), and second language learning (Kohnke, 2023). Several textbooks have also been published (Elstad, 2023; Krumsvik, 2023). Overviews of research on educational AI have taken several directions (AlBadarin et al., 2023; Bahroun et al., 2023; Baytak, 2023; Celik et al., 2022; Holmes & Tuomi, 2022; Hopfenbeck et al., 2023; Kuhail et al., 2023; Lo, 2023). Dystopian considerations about what generative AI will mean for teachers' work situations have also been communicated (e.g., Buxrud, 2023; Osvold, 2023). This is particularly true in the context of pupils who submit AI-generated texts for teachers to assess (Chaudhry et al., 2023; Cotton et al., 2024; Sullivan et al., 2023; Susnjak, 2022). However, the new AI technology has also created enthusiastic expectations among tech-savvy avant-gardists (Adiguzel et al., 2023; Baidoo-Anu & Ansah, 2023; Zhai, 2023). Educators and policymakers are particularly concerned about issues such as misinformation, safeguarding data privacy, and the risk of AI ‘thinking’ replacing traditional human learning methods.

Teachers typically exhibit one of three stances when it comes to embracing new technology: some resist incorporating it into their teaching, others exhibit enthusiasm and a drive to discover viable applications for the innovation, and a third group assumes a cautious ‘wait-and-see’ approach (Elstad, 2016). Although generative AI was not specifically designed for educational contexts, schools are now tasked with integrating this new technology into the classroom.

This article reports on a survey conducted 6–8 weeks after Oslo Municipality, the largest municipality in Norway, unveiled a chatbot for use in schools. Its purpose is to explore the antecedents of instructional AI utility in the early stages of school-specific chatbot implementation. The research questions that the study aims to address are:

1. How have teachers adopted the new generative AI technology (in this case, the chatbot) into their instructional practices? To what extent do they find the technology useful for educational purposes?

2. How does teachers’ belief in their own ability to effectively incorporate AI into their teaching (instructional self-efficacy) relate to their perceived instructional AI utility?

3. What is the role of school management in the successful integration of AI into teaching and learning processes?

4. How do discussions among teachers about the AI technology affect their potential use of it? Are these discussions contributing positively to increasing teacher efficacy and perceived utility of AI?

Theoretical framework

The theoretical framework employed in the present study draws on several groups of theory to explore the response to the introduction of secure chatbots in schools, in particular how national and local educational authorities and managers can incentivize teachers to tackle chatbot integration. At the core of these discussions, we lean on the theories of initiation, implementation, and institutionalization. Next, we consider the broad spectrum of teacher reactions, which can range from resistance at one end to enthusiasm at the other, with indifference in the middle, and we emphasize the potential for social learning through dialogue among the staff. Below, we dissect our multifaceted theoretical framework and the hypotheses it has led us to formulate.

The endogenous variable in our theoretical model is high school teachers’ instructional AI utility, that is, the perceived effectiveness and assistance that AI provides to teachers in different aspects of their professional responsibilities, particularly decision-making, insights into student learning, and lesson planning. AI is here viewed as a tool that enhances a teacher's ability to perform their job more effectively and efficiently. Instructional AI utility among teachers refers to the extent to which AI-based tools and systems can effectively support and enhance instructional practices in the classroom. It encompasses the ability of AI technology to improve teaching methods, facilitate personalized learning experiences, provide actionable feedback, and contribute to positive pupil outcomes. The effectiveness of instructional AI depends on several factors, including its ability to accurately analyze and interpret pupil data, adapt content and strategies to individual needs, and engage pupils in meaningful learning experiences.

We believe that self-efficacy plays a crucial role in shaping teachers’ attitudes (Tschannen-Moran et al., 1998) towards and beliefs about AI, as it can influence their motivation, confidence, and willingness to adopt and use AI tools in the classroom. Teachers’ AI instructional efficacy is seen as an individual antecedent of instructional AI utility. It refers to teachers’ beliefs in their capability to effectively use AI tools for pedagogical purposes and is an important factor influencing teachers’ perceptions of the usefulness of AI in teaching. When teachers feel competent and confident in their ability to use instructional AI, they are more likely to perceive it as useful for their teaching practices. Teachers with greater self-efficacy are more likely to engage in exploratory behavior (Holzberger et al., 2013) and actively seek opportunities to integrate AI into their teaching practices. Teachers may also perceive digital tools as challenging or even overwhelming and doubt their own ability to effectively integrate them into the classroom (Klassen & Chiu, 2010). Consequently, hypothesis no. 1 is ‘Teachers’ AI instructional self-efficacy is positively related to their instructional AI utility’. This hypothesis, which is based on analogical reasoning from previous research on teachers (Holzberger et al., 2013), suggests that there is a relationship between teachers' self-perceived competence in using AI for instructional purposes (instructional AI efficacy) and their perceived usefulness and effectiveness of AI in teaching (instructional AI utility).

Encouraging and supporting teachers to work together within professional communities to explore the use of AI can lead to more effective integration of AI in schools, better responses to challenges, and better overall educational outcomes (Fernández-Batanero et al., 2022). It is also important to provide teachers with the necessary resources, time, and professional development opportunities to effectively collaborate and learn. Discussions among school professionals are essential for investigating the usefulness of AI tools as they facilitate sharing experiences, building knowledge and expertise, solving problems, reflecting on pedagogical practices, and enhancing implementation and effectiveness. Norwegian education authorities encourage teachers to find answers to the challenges posed by AI through professional learning communities (The Norwegian Directorate for Education and Training, 2023). These discussions might empower teachers to make informed decisions about the integration of AI tools and promote continuous improvement in leveraging AI for instructional purposes. In the light of social learning theory (Bryk & Schneider, 2002), we acknowledge that a conducive environment incentivizes employees to learn, cooperate, and foster innovation. This is also the message from the local authorities: “Leaders and employees in the Oslo school system should set aside time to experiment with, explore, and become familiar with AI, so that we can evaluate how AI can be used to create the best possible learning outcomes in teaching” (Osloskolen, 2024). However, resistance may emerge if staff members feel threatened by a new technology or believe it contradicts their values, like maintaining academic integrity. Furthermore, the discussions are thought to encourage ongoing enhancement of their skills in using AI for instructional purposes. 
Consequently, the result of these collaborative discussions is believed to foster the development of teachers' AI utility, meaning their practical application of AI in education becomes more effective and purposeful. Professional communities facilitate networking, knowledge sharing, professional development, peer support, and access to valuable resources to help teachers navigate AI integration and enhance their instructional practices. Hypothesis no. 2 posits that when teachers engage in discussions with each other about AI tools and their use in education, these interactions enhance their ability to make knowledgeable decisions about how to incorporate these tools into their teaching.

Overall, our framework suggests that teachers’ interactions and perceptions of a professional community within the school may positively correlate with how useful they find chatbots in their teaching. Healthy relationships among colleagues could influence teachers’ acceptance of AI technology. Ultimately, this in-depth theoretical examination will guide us through the uncharted territory of integrating secure chatbots into a school environment. Expectancy theory (Lawler & Suttle, 1973) supports the notion that it is essential for managers to be clear about the goals and the expectations placed on individual employees (Camphuijsen, 2021). This theory posits that individuals are motivated to exert effort when they believe it will contribute to desired outcomes. Hence, when managers articulate clear expectations, they forge a direct connection between employees’ efforts and the organization’s objectives. A clear understanding of these expectations enables employees to tailor their actions to fulfil these goals, providing them with a trajectory, giving clarity about their roles, and elucidating how their input propels the organization forward. This pivotal role of managers comes with an imperative to present ‘clear management’, a term frequently spotlighted in job listings for Norwegian principals. It is important to recognize that each school is home to a cohort of managers who collectively form the management team. Managers’ clearly expressed goals denote effective communication between teachers and managers. Clear and concise communication from a school’s managers clarifies expectations, indicating a desire for clarity and alignment in work-related tasks. To effectively communicate their priorities, managers should convey a sense of understanding and shared objectives. Based on this, our hypothesis no. 3 is that teachers’ perceptions of clear communication from this management group will be positively related to their instructional AI utility.

In addition to our three main hypotheses, we also explore how instructional AI efficacy is related to management and discussion among colleagues. Here, we assume that at its best, effective management could strengthen teachers' instructional efficacy. We also assume that management could lay a foundation for discussions among colleagues and thus be positively related to colleague discussion. Further, we are exploring how the strength of colleague discussion is related to individual teachers' instructional AI efficacy. Once again, we assume that the relationship between colleague discussion and teacher efficacy is positive.

Educational context

Norwegian teachers have a large degree of autonomy to exercise professional discretion in their work (Larsen et al., 2021). Thus, they have scope to influence how they relate to AI. Norwegian educational authorities have chosen a strategy of delegating to counties and municipalities (for public schools) and to private schools the responsibility for making generative AI available in a way that safeguards privacy and for developing teachers’ competence in using AI (Ministry of Education and Research and Association of Municipalities, 2023). Schools have widely different guidelines for how teachers should deal with pupil-submitted texts that may have been created by AI (Buxrud, 2023).

The local education authority, Oslo Municipality, developed a chatbot in compliance with relevant privacy regulations in the fall of 2023. The divisional director for service development, digitization, and analysis in Oslo Municipality said:

We are committed to gaining first-hand experience with AI, and knowledgeable educators will assist us in this endeavor. Our current focus is on the cautious introduction of the safe use of ChatGPT in high schools. We are proceeding carefully as we aim to accumulate valuable experience. To support this initiative, we have assembled a team of AI mentors (Ingebretsen, 2023, August 15).

From October 2023, all high school teachers could log into and use the school-specific chatbot, and the survey was carried out 6–8 weeks later. Before this, both students and teachers had access to chatbots in the educational context through their connection to the Internet. In the first stage after the launch of the municipal chatbot, the local school authorities pursued a low-key policy. However, the launch of the chatbot was discussed with the teachers' unions in December 2023 to gain acceptance for a cautious trial (Lien, 2024, January 8). National education authorities have created uncertainty about the significance of generative AI in connection with assessment. Which internet pages pupils will have access to during the exam was decided in February 2024. Further, in the spring of 2024, high schools will have the opportunity to try out long-term projects as an alternative to the traditional exam forms. In this connection, generative AI becomes a possible tool for pupils. At the time of writing, there is no unified approach to generative AI in Norwegian education. Without national rules, schools may develop inconsistent standards for what is considered acceptable use of AI in pupil work, which can lead to confusion among both pupils and educators about what constitutes plagiarism or academic dishonesty. In the absence of guidelines, teachers are left to make individual judgments about AI-generated content, which may lead to subjective evaluation and potential conflicts between pupils, teachers, and managers.

This situation contributes to the current confusion about how schools should meet generative AI. The empirical results presented therefore depict a situational picture in the early stages of school-specific chatbot implementation.

Material and method

We used our judgment to select participants from the schools that were most informative for the study's purpose. We invited five high schools in Oslo to participate in a survey approximately 6–8 weeks after the local chatbot was launched. To encourage participation, one of the authors offered to give a lecture on generative AI in schools to teachers as a reciprocal gesture. There is considerable diversity among these five high schools in terms of the socio-economic levels and ethnic backgrounds of the pupils. The schools included in the survey cover the full range of diversity among Oslo schools, as reflected in admission scores and average grades when pupils leave school. All schools offer a program for academic studies. The sample was therefore based on a strategic choice of participating schools that reflects the diversity among high schools in Oslo that prepare pupils for academic studies. The survey was carried out by having teachers complete a paper-based questionnaire during a mandatory school meeting. Participation in the survey was voluntary, but no teachers declined to participate. Participants were informed about the project and assured that they could withdraw from the study at any time without giving any reason. Observation did not reveal that any of the 246 teachers avoided submitting their completed questionnaires.[1] However, 10 completed questionnaires were deleted. The response rate was therefore about 96% among the teachers at the five schools. Teachers who were absent due to illness at the time of the survey are not represented in the data. It is reasonable to assume that illness was randomly distributed among school staff, so their absence should not introduce any systematic bias. We believe that the sample on which the survey is based reflects well the diversity of teachers in high schools in Oslo offering an academic program.
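The response rate quoted above can be checked with a few lines of arithmetic. The sketch below assumes that the rate is the share of retained questionnaires among the 246 teachers present; the variable names are illustrative.

```python
# Figures taken from the text: 246 teachers handed in questionnaires, 10 were deleted.
submitted = 246
deleted = 10

analysed = submitted - deleted          # questionnaires kept for analysis
response_rate = analysed / submitted    # assumed definition: retained / submitted

print(f"Analysed questionnaires: {analysed}")
print(f"Response rate: {response_rate:.1%}")
```

The result matches the sample size of 236 reported in the abstract and rounds to the stated figure of about 96%.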

By using structural equation modelling (SEM) in a study on the antecedents of instructional AI utility during the early stages of school-specific chatbot implementation, we can gain insights into the underlying mechanisms and relationships among variables (Kline, 2023). Antecedents refer here to the variables that precede and potentially influence instructional AI utility. These variables are hypothesized to affect the outcome and are used to understand and explain variability in the endogenous variable. Those insights, in turn, can inform strategies for the successful integration of AI technologies in educational settings and help identify factors critical to their acceptance and effectiveness. SEM is a statistical methodology that allows researchers to examine complex relationships between observed and latent variables. SEM takes into account measurement error, which provides more accurate estimates of the relationships between variables. This is especially important when relying on survey data, which can be prone to such errors.
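As a rough sketch of what such a model looks like, the relations hypothesized in the theoretical framework can be written as a set of structural equations among the four latent variables. The notation (path coefficients $\beta$ and $\gamma$, disturbance terms $\zeta$, and the shortened variable names) is an illustrative assumption rather than the specification estimated in the study:

```latex
\begin{align}
\text{DiscussionC} &= \gamma_{1}\,\text{Management} + \zeta_{1} \\
\text{InstrSE}     &= \gamma_{2}\,\text{Management} + \beta_{1}\,\text{DiscussionC} + \zeta_{2} \\
\text{AIutility}   &= \gamma_{3}\,\text{Management} + \beta_{2}\,\text{DiscussionC}
                      + \beta_{3}\,\text{InstrSE} + \zeta_{3}
\end{align}
```

In this sketch, hypotheses 1 to 3 correspond to the expectation that $\beta_{3}$, $\beta_{2}$, and $\gamma_{3}$ are positive, while $\gamma_{1}$, $\gamma_{2}$, and $\beta_{1}$ capture the additional exploratory relations. Each latent variable would in turn be linked to its survey items through a measurement model that separates the latent score from item-level measurement error.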

The items in the survey were partly adapted from indicators obtained from other scholars’ research (e.g., the Use of Technology developed by Venkatesh et al. [2012] and adapted by Strzelecki [2023]), partly taken from indicators we have used in other studies, and partly newly developed (Haladyna & Rodriguez, 2013).

Results

In the survey, the teachers responded to items on a five-point Likert scale, with three representing a neutral midpoint. We used the following Likert-type scale: 1 = completely agree; 2 = somewhat agree; 3 = neither agree nor disagree; 4 = somewhat disagree; 5 = completely disagree. The exceptions were items 93 and 95, for which we used: 1 = completely certain; 2 = fairly certain; 3 = neither certain nor uncertain; 4 = fairly uncertain; 5 = very uncertain. Each concept was measured with two or three items. Descriptive data (Table 1) and the measurement and structural models (Figure 1) were estimated using IBM SPSS and IBM SPSS Amos 28.

Table 1. Descriptive statistics and Cronbach’s alpha for the indicators (N = 236). SD means standard deviation. Item names are abbreviated to their numbers for clarity.

| Item | Text | Min | Max | Mean | SD | Alpha |
|---|---|---|---|---|---|---|
| | Instructional AI utility (adapted from Venkatesh et al. (2012) and Strzelecki (2023)) | | | | | 0.80 |
| 5 | Artificial intelligence makes it easier for me to make decisions about matters related to teaching | 1 | 5 | 3.56 | 1.044 | |
| 6 | Artificial intelligence can give me insights into what the pupils should be able to do when I teach specific academic content | 1 | 5 | 3.35 | 1.173 | |
| 7 | Artificial intelligence helps relieve the work of planning lessons | 1 | 5 | 3.50 | 1.090 | |
| | Instructional AI efficacy (adapted from Skaalvik & Skaalvik, 2007) | | | | | 0.93 |
| | How sure are you that you: | | | | | |
| W93 | could motivate pupils to use artificial intelligence in a way that promotes learning? | 1 | 5 | 2.78 | 0.93 | |
| W95 | could motivate pupils to use artificial intelligence as a tool to improve their writing skills? | 1 | 5 | 2.77 | 0.91 | |
| | Management to the achievement of desired purposes | | | | | 0.86 |
| 67 | Communication with managers helps me understand what is expected of me. | 1 | 5 | 2.21 | 0.91 | |
| 68 | The managers succeed in communicating clearly what is important to them. | 1 | 5 | 2.25 | 0.89 | |
| 69 | Communication from managers is generally very clear. | 1 | 5 | 2.24 | 1.02 | |
| | Discussion with colleagues (adapted from Bryk and Schneider, 2002) | | | | | 0.80 |
| 29 | Discussing how to stimulate pupils’ learning processes with colleagues makes me a better teacher. | 1 | 5 | 1.49 | 0.71 | |
| 30 | Discussions with colleagues provide insights into how I can improve my teaching. | 1 | 5 | 1.43 | 0.67 | |
| 31 | Discussions with colleagues give me useful input on how artificial intelligence can be used in teaching. | 1 | 5 | 1.84 | 0.86 | |

The descriptive statistics indicate that the teachers overall have a neutral perception of the utility of AI (M = 3.4, SD = 1.1). Moreover, they report a positive perception of their self-efficacy for using AI (M = 2.8, SD = 0.92), a positive perception of management to the achievement of desired purposes (M = 2.2, SD = 0.94), and a clearly positive perception of the value of discussion with colleagues (M = 1.6, SD = 0.75). Because 1 denotes complete agreement (or complete certainty) on the scales used, lower values indicate a more positive perception.
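To make the direction of the scale concrete, the following minimal sketch averages the item means listed in Table 1 for each concept and also shows the values reverse-coded so that higher numbers mean stronger agreement. It assumes an unweighted average of item means with the same N for every item; the function names are illustrative, and the output can differ slightly from the scale means reported in the text, which may have been computed from respondent-level data.

```python
from statistics import mean

# Item means copied from Table 1 (1 = completely agree ... 5 = completely disagree).
ITEM_MEANS = {
    "Instructional AI utility": [3.56, 3.35, 3.50],
    "Instructional AI efficacy": [2.78, 2.77],
    "Management": [2.21, 2.25, 2.24],
    "Discussion with colleagues": [1.49, 1.43, 1.84],
}


def scale_mean(item_means):
    """Unweighted average of item means (assumes every item has the same N)."""
    return mean(item_means)


def reverse_code(score, low=1, high=5):
    """Flip a Likert score so that higher values mean stronger agreement."""
    return low + high - score


scale_means = {name: scale_mean(values) for name, values in ITEM_MEANS.items()}
for name, value in scale_means.items():
    print(f"{name}: {value:.2f} (reverse-coded: {reverse_code(value):.2f})")
```

Reverse-coding is only a presentational choice here: it does not change the size of any relationship, only the sign of correlations with variables that are not reversed.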

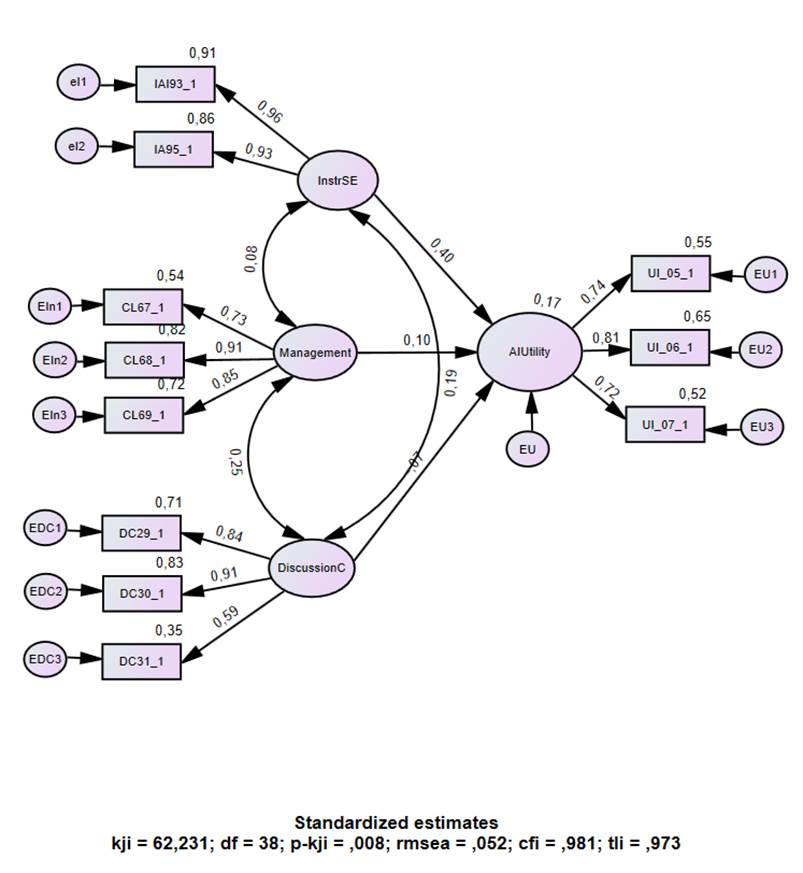

The SEM results are presented in Figure 1.

Figure 1. Structural equation model (SEM) with abbreviations: Instructional self-efficacy (InstrSE), Management, Discussion with colleagues (DiscussionC), and Instructional AI utility (AIutility).

Our SEM analysis revealed that the proposed model demonstrated an acceptable fit to the data: the chi-square statistic (kji) indicates a reasonable fit between the hypothesized model and the observed data. The comparative fit index (CFI) exceeded the acceptable threshold with a value of 0.95, suggesting that the model is a good representation of the real data structure. The Tucker-Lewis index (TLI) also indicated a good model fit, with a value of 0.973, and the root mean square error of approximation (RMSEA) was 0.052, which is below the recommended cut-off (Kline, 2023), further confirming the model’s adequacy. Collectively, these indices support the conclusion that our model provides a good fit to the data.

Pathways represent the strength and direction of the relationship between variables. These coefficients are standardized estimates like beta weights in regression analysis. A value above zero indicates a positive relationship (as one variable increases, so does the other), while a value below zero indicates a negative relationship (as one variable increases, the other decreases). Here, we consider the practical or substantive significance of the pathways, which involves assessing whether the observed relationships are meaningful in the real-world context.

The analysis shows that the pathway between instructional AI efficacy and AI utility has the highest positive value (0.40), while the pathways between management to the achievement of desired purposes and AI utility and between colleague discussion and AI utility have relatively low numerical values. A low path coefficient indicates a weak relationship between an exogenous variable and the endogenous variable. This suggests that the exogenous variable does not explain much of the variance in the endogenous variable and implies that there is no substantial evidence that it has an important influence on the outcome variable.

Discussion

The pathway with the strongest relation to teachers' AI utility is teacher AI efficacy, with a coefficient of 0.40. This suggests that individual factors have a greater influence than organizational factors in determining the perceived effectiveness of AI. This finding is not unexpected since the survey was conducted shortly after Oslo Municipality's version of the chatbot was launched. Considering that teachers typically carry out their primary task of teaching independently, it makes sense that their own beliefs and attitudes towards AI would have a significant impact. The traditional structure of the teaching profession is characterized by what is referred to as structural individualism, where teachers work in isolation in their own classrooms (Lortie, 2020).

Collaborative effort might be useful when addressing the challenges of AI in education. We find a positive pathway between discussion with colleagues and teacher efficacy (0.19). Norwegian education authorities believe that teachers must find solutions in ‘professional communities’ (The Norwegian Directorate for Education and Training, 2023) to the opportunities and challenges that generative AI creates in schools. How can this idea be justified? Professional communities bring together diverse perspectives and experiences; they provide peer support and give teachers a safe space to share their challenges, frustrations, and victories. By collaborating, teachers can benefit from one another’s experiences and feedback, generating creative and effective solutions that individual teachers may not arrive at alone. Through professional communities, the responsibility is shared, which can lead to a more comprehensive understanding of and approach to tackling these obstacles. As generative AI continues to evolve, professional communities provide a platform for ongoing learning and professional development. Further, by working together in professional communities, teachers can help create a shared understanding and standardization of the use of AI in education. Our findings indicate a positive relationship between colleague discussion and teacher efficacy. However, the relationship between colleague discussion and AI utility is weak and slightly negative (-0.07). Further research is needed to understand this relationship better.

The results reported above must be understood against the background that no guidelines have been formulated for schools’ use of AI at either the national or the local level. The principals have thus not received clear signals about the kinds of goals towards which they should lead their schools. On the other hand, the pathway between management to the achievement of desired purposes and colleague discussion is substantial (0.25). The pathways can be interpreted as meaningful relations: the subjective benefit that teachers see in using AI in teaching depends primarily on their instructional AI efficacy and their collegial discussions. Management to the achievement of desired purposes may at first appear to have only a modest direct effect, but it may have an indirect influence by creating room for discussion among colleagues.
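The idea of an indirect influence can be illustrated with the standard path-tracing rule, under which an indirect effect along a chain is the product of the standardized coefficients on that chain. The sketch below uses only the coefficients quoted in the text; the coefficients for the direct paths from management are not stated there and are therefore left out, and the function name is illustrative. These products are a back-of-the-envelope illustration, not results reported by the study.

```python
from math import prod

# Standardized path coefficients quoted in the text.
PATHS = {
    ("Management", "DiscussionC"): 0.25,
    ("DiscussionC", "InstrSE"): 0.19,
    ("InstrSE", "AIutility"): 0.40,
    ("DiscussionC", "AIutility"): -0.07,
}


def indirect_effect(route):
    """Product of the standardized coefficients along a chain of variables."""
    return prod(PATHS[(a, b)] for a, b in zip(route, route[1:]))


via_efficacy = indirect_effect(["Management", "DiscussionC", "InstrSE", "AIutility"])
via_discussion = indirect_effect(["Management", "DiscussionC", "AIutility"])

print(f"Management -> DiscussionC -> InstrSE -> AIutility: {via_efficacy:.4f}")
print(f"Management -> DiscussionC -> AIutility: {via_discussion:.4f}")
```

Both products are small in absolute terms, which is consistent with reading the indirect role of management as a possibility to be examined in later studies and not as an established effect.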

The strategy of the local education authority was to relieve some teachers of part of their duties so that they could lead educational development in AI and serve as their schools’ internal drivers for AI. A possible challenge is that such a strategy does not work effectively when the messages from these AI mentors have so far not been related to the managers’ instructional leadership. The quite low numerical value of the pathway from management to AI utility can be interpreted as indicating that the social influence process, whereby intentional influence is exerted by school managers and mentors “to structure activities and relationships” (Bush & Glover, 2014, p. 554), has untapped potential. The concept of distributed leadership is relevant here. Distributed leadership involves spreading leadership roles and influence throughout an organization, rather than concentrating them in one or a few top positions. Despite its popularity, there are concerns about its implementation and potential to blur the power relationship between leaders and followers (Bush & Glover, 2014). How leadership on implementing AI should be shared in high schools remains an open question for future research.

The untapped potential must be seen in the context of the fact that neither national nor local education authorities had issued guidelines for the use of AI in schools when the survey was conducted. There is reason to assume that teachers will gain experience and reach a new stage as they explore and seek to understand how AI works and what it can do. This may involve attending school-based workshops, viewing webinars, or experimenting on their own with AI tools such as school-specific chatbots. Over time, teachers might reach a more mature understanding based on a broader base of experience, in which they evaluate the relevance of the AI tools to their curricula and teaching methods. This stage involves considering AI’s benefits and drawbacks and any potential influences on pupil learning and engagement. Willing teachers begin to incorporate AI tools into their teaching on a trial basis to assess their practicality and effectiveness. Success at this stage can lead to the broader adoption and routine use of AI resources in the classroom. After using AI tools over time, teachers will reflect on how these resources have affected their teaching and their pupils’ learning, and they may adjust their instructional strategies based on these insights. This can strengthen teacher efficacy.

The pathway from discussion with colleagues to AI utility also has a low numerical value. This can be interpreted to mean that the potential for learning through discussion among colleagues can be utilized to an even greater degree. Whether these interpretations hold can be investigated when managers and teachers have better familiarized themselves with the opportunities and limitations of using instructional AI. Learners and teachers can gradually become aware of generative AI technology and its potential applications in education. Learners can use chatbots as a tool in their learning efforts, or they can avoid effort and strain by simply copying the texts that the chatbot produces. Teachers are starting to explore how they can use generative AI. This survey was completed at an early stage of AI implementation in Oslo Municipality, approximately one year after generative AI chatbots were launched internationally.

The data presented in this study are largely based on an analysis of a questionnaire survey with multiple-choice questions and ratings, but teachers also answered open-ended questions. Some teachers expressed opposition to the focus on and integration of AI in the classroom, implying a belief that emphasizing AI in education may divert attention and resources away from other important aspects of teaching and learning. One viewpoint is that pupils’ academic understanding and performance without the use of aids, including AI, should be the primary basis for grading and assessing their knowledge. This underscores the importance of pupils’ independent thinking, comprehension, and application of knowledge without relying heavily on technological tools. One example ran as follows:

I don’t see why I ... should embrace Oslo Municipality’s chatbot. It’s a Catch-22 situation. It’s possible that pupils can learn something by using it, but the temptation to cheat and skip the actual learning will always be present. I miss a manager or an authority or politicians who say we should close the web and help us with that. We need AI-free spaces where pupils can really learn. But as of now there is no off button because people think new technology is something that cannot be avoided, like gravity.

The teachers’ responses mainly concern assessments of suitability in teaching subjects that will prepare pupils for higher education. They emphasize that there is a fundamental difference between the use of generative AI as a productivity-enhancing tool in the business world and as a learning-enhancing tool in the classroom. For example, several teachers expressed frustration that pupils used AI-generated texts to answer school assignments. From this perspective, generative AI can be compared to the use of anabolic steroids in sports: an artificial enhancement that does not reflect actual performance. This use runs afoul of teachers’ fairness perceptions that the grades they assign should be based on pupils’ true and honest performance and not on artificially enhanced performance. However, there are nuances in teachers’ views. For example, a marketing teacher explained how generative AI can be used to create advertisements and other informative material by combining image-generating prompts with text. Another teacher emphasized how the pupils could play with different genres: ‘Almost every day I’m in AI with my class. There are so many areas we can explore together. With proper use, there is a lot of good learning here. I don’t want to look at the limitations. I want to look at all the possibilities this digital tool gives us!’

The absence of a unified approach to generative AI in Norwegian education can lead to disparities in pedagogical methods. Some teachers might fully embrace AI as a learning tool, while others may reject it, leading to inconsistent educational experiences and outcomes. The adoption of generative AI in high school teaching is a rapidly evolving process influenced by individual and collective teacher attitudes, school policies, access to technology, and professional support systems. As AI continues to permeate educational contexts, teacher education and support structures must adapt to equip educators with the necessary competencies to harness AI effectively. The adoption of generative AI by high school teachers can be a multifaceted process involving several stages, from initial awareness to full integration into instructional practices. This is an avenue for our further research.

Cross-sectional designs capture a snapshot in time, which may not reflect fluctuations or trends that occur over a longer period. Therefore, we will follow up the present study over time as teachers gain more experience in using the school-specific chatbot. Purposive sampling, which we used, can be very useful in situations where researchers need to reach a targeted sample. While this method can provide valuable insights, it also introduces possible selection bias, as the sample may not be representative of the entire population. Because the response rate was high, the risk of selection bias is reduced. Nevertheless, despite the breadth of Oslo high school teachers who participated, we cannot claim that the sample is representative, and the results cannot be generalized to the whole population or support final conclusions. Although the participants answered anonymously, their responses may still have been affected by social desirability bias or by misunderstanding of some of the questions. Despite these limitations, our study can be useful for teachers, managers, and educational authorities as it provides valuable insights into the very early experiences with a school-specific chatbot. It can also inform future research.

Limitations

The AI strategy from the local authorities was unveiled on August 15, 2023, right before the start of the new school year. By the time the data were collected, the school-specific chatbot had been available to both teachers and pupils for six to eight weeks. The local school authorities had clarified their expectations around two months prior. Our communication with the principals has made it clear that they are cognizant of these expectations. Each high school has been provided with resources, including a reduced teaching load for school mentors, to facilitate the chatbot's implementation. However, the fact that management's engagement was examined only 6–8 weeks after the technology's introduction raises questions about whether it was practical to develop a well-rounded implementation strategy and provide sufficient support for teachers within such a short span. This is a limitation of our study.

This study also has limitations based on the chosen method. A cross-sectional questionnaire study that uses structural equation modelling (SEM) can provide valuable insights, but it also has several limitations; one of the most important is the inability to infer causality from cross-sectional data. SEM models often depict causal processes, but in the absence of temporal precedence, it is difficult to draw definitive conclusions about cause-and-effect relationships. SEM results are only as good as the theoretical model that underpins them. If a model is incorrectly specified, then the findings may reflect this error rather than an accurate relationship between variables. Unmeasured variables can affect both exogenous and endogenous variables. Low path coefficients can signal that the theoretical model may need to be reassessed. However, SEM is evaluated on the fit of the entire model and not only on individual pathways. Therefore, low path coefficients should be interpreted within the context of the overall model fit indices (RMSEA, CFI, and TLI) and the theoretical framework guiding the research. Our structural model has a good fit, so even weak paths offer insights into the relationships being examined, though they may not be as influential as stronger ones. While we are confident in our model specification, we acknowledge the need to include more variables in our next step.
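
To make the three fit indices named above concrete, the following is a minimal sketch of their commonly used textbook formulas, computed from the chi-square statistics of the fitted model and of the baseline (independence) model. The function names and the input values in the usage note are hypothetical and chosen only for illustration; they are not the statistics of the model reported in this article, and SEM software may apply slightly different variants (for example, dividing by N rather than N − 1 in RMSEA).

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation for a model with
    chi-square `chi2`, degrees of freedom `df`, and sample size `n`."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Comparative fit index: fitted model (m) versus baseline model (b)."""
    d_m = max(chi2_m - df_m, 0.0)  # noncentrality of the fitted model
    d_b = max(chi2_b - df_b, 0.0)  # noncentrality of the baseline model
    denom = max(d_m, d_b)
    # If neither model shows misfit, the index is defined as 1.
    return 1.0 if denom == 0.0 else 1.0 - d_m / denom


def tli(chi2_m: float, df_m: int, chi2_b: float, df_b: int) -> float:
    """Tucker-Lewis index; undefined when chi2_b / df_b equals 1."""
    ratio_b = chi2_b / df_b
    ratio_m = chi2_m / df_m
    return (ratio_b - ratio_m) / (ratio_b - 1.0)


if __name__ == "__main__":
    # Hypothetical inputs, NOT taken from this study.
    print(round(rmsea(100.0, 50, 201), 3))
    print(round(cfi(100.0, 50, 1050.0, 50), 3))
    print(round(tli(100.0, 50, 1050.0, 50), 3))
```

All three indices summarize the misfit of the whole model rather than the size of any single path, which is why a model can fit well overall while still containing weak individual pathways.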

Our conclusions are based on statistical material as well as statements written by teachers in the forms they filled out. Since all information was conveyed anonymously, we do not see any incentives for teachers to provide misleading information, but this combination of quantitative and qualitative data might still leave room for disputable conclusions. We envision that our follow-up studies and other researchers' studies will contribute to a more accurate understanding of the complex encounter between teachers and AI. Thus, our research contribution serves as an avenue for further research.

Funding

The authors declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Acemoglu, D., & Lensman, T. (2023). Regulating transformative technologies (Working Paper No. 31461). National Bureau of Economic Research. https://doi.org/10.3386/w31461

Adiguzel, T., Kaya, M. H., & Cansu, F. K. (2023). Revolutionizing education with AI: Exploring the transformative potential of ChatGPT. Contemporary Educational Technology, 15(3). https://doi.org/10.30935/cedtech/13152

AlBadarin, Y., Tukiainen, M., Saqr, M., & Pope, N. (2023). A systematic literature review of empirical research on ChatGPT in education. SSRN. https://doi.org/10.2139/ssrn.4562771

Bahroun, Z., Anane, C., Ahmed, V., & Zacca, A. (2023). Transforming education: A comprehensive review of generative artificial intelligence in educational settings through bibliometric and content analysis. Sustainability, 15(17). https://doi.org/10.3390/su151712983

Baidoo-Anu, D., & Ansah, L. O. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. Journal of AI, 7(1), 52–62. https://doi.org/10.61969/jai.1337500

Baytak, A. (2023). The acceptance and diffusion of generative artificial intelligence in education: A literature review. Current Perspectives in Educational Research, 6(1), 7–18. https://doi.org/10.46303/cuper.2023.2

Bryk, A., & Schneider, B. (2002). Trust in schools: A core resource for improvement. Russell Sage Foundation.

Bush, T., & Glover, D. (2014). School leadership models: What do we know? School Leadership & Management, 34(5), 553–571. https://doi.org/10.1080/13632434.2014.928680

Buxrud, O. (2023, November 15). Jeg tror ikke folk forstår hvor mye skade ChatGPT gjør [I don't think people understand how much damage ChatGPT is doing]. Nettavisen. https://www.nettavisen.no/norsk-debatt/jeg-tror-ikke-folk-forstar-hvor-mye-skade-chatgpt-gjor/o/5-95-1455635

Camphuijsen, M. K. (2021). Coping with performance expectations: Towards a deeper understanding of variation in school principals’ responses to accountability demands. Educational Assessment, Evaluation and Accountability, 33(3), 427–453. https://doi.org/10.1007/s11092-020-09344-6

Cao, L., & Dede, C. (2023). Navigating a world of generative AI: Suggestions for educators. Harvard Graduate School of Education. https://bpb-us-e1.wpmucdn.com/websites.harvard.edu/dist/a/108/files/2023/08/Cao_Dede_final_8.4.23.pdf

Celik, I., Dindar, M., Muukkonen, H., & Järvelä, S. (2022). The promises and challenges of artificial intelligence for teachers: A systematic review of research. TechTrends, 66, 616–630. https://doi.org/10.1007/s11528-022-00715-y

Chang, C. H., & Kidman, G. (2023). The rise of generative artificial intelligence (AI) language models—challenges and opportunities for geographical and environmental education. International Research in Geographical and Environmental Education, 32(2), 85–89. https://doi.org/10.1080/10382046.2023.2194036

Chaudhry, I. S., Sarwary, S. A. M., El Refae, G. A., & Chabchoub, H. (2023). Time to revisit existing student’s performance evaluation approach in higher education sector in a new era of ChatGPT: A case study. Cogent Education, 10(1). https://doi.org/10.1080/2331186X.2023.2210461

Cotton, D. R., Cotton, P. A., & Shipway, J. R. (2024). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International, 61(2), 228–239. https://doi.org/10.1080/14703297.2023.2190148

Dell’Acqua, F., McFowland, E., Mollick, E. R., Lifshitz-Assaf, H., Kellogg, K., Rajendran, S., Krayer, L., Candelon, F., & Lakhani, K. R. (2023). Navigating the jagged technological frontier: Field experimental evidence of the effects of AI on knowledge worker productivity and quality. Harvard Business School Technology & Operations Management Unit Working Paper No. 24-013. https://doi.org/10.2139/ssrn.4573321

Elstad, E. (2016). Why is there a wedge between the promise of educational technology and the experiences in a technology-rich Pioneer School? In E. Elstad (Ed.), Digital expectations and experiences in education (pp. 77–96). Sense Publishers. https://doi.org/10.1007/978-94-6300-648-4_5

Elstad, E. (2023). Læreren møter ChatGPT. [The teacher meets ChatGPT]. Universitetsforlaget.

Farrokhnia, M., Banihashem, S. K., Noroozi, O., & Wals, A. (2023). A SWOT analysis of ChatGPT: Implications for educational practice and research. Innovations in Education and Teaching International. https://doi.org/10.1080/14703297.2023.2195846

Fernández-Batanero, J. M., Montenegro-Rueda, M., Fernández-Cerero, J., & García-Martínez, I. (2022). Digital competences for teacher professional development: Systematic review. European Journal of Teacher Education, 45(4), 513–531. https://doi.org/10.1080/02619768.2020.1827389

Fischer, G. (2023). A research framework focused on AI and humans instead of AI versus humans. IxD&A – Interaction Design and Architecture(s) Journal, 59(1), 17–36. https://doi.org/10.55612/s-5002-059-001sp

Guarte, J. M., & Barrios, E. B. (2006). Estimation under purposive sampling. Communications in Statistics-Simulation and Computation, 35(2), 277–284. https://doi.org/10.1080/03610910600591610

Haladyna, T. M., & Rodriguez, M. C. (2013). Developing and validating test items. Routledge. https://doi.org/10.4324/9780203850381

Holmes, W., & Tuomi, I. (2022). State of the art and practice in AI in education. European Journal of Education, 57(4), 542–570. https://doi.org/10.1111/ejed.12533

Holzberger, D., Philipp, A., & Kunter, M. (2013). How teachers' self-efficacy is related to instructional quality: A longitudinal analysis. Journal of Educational Psychology, 105(3), 774–786. https://doi.org/10.1037/a0032198

Hopfenbeck, T. N., Zhang, Z., Sun, S. Z., Robertson, P., & McGrane, J. A. (2023). Challenges and opportunities for classroom-based formative assessment and AI: A perspective article. Frontiers in Education, 8. https://doi.org/10.3389/feduc.2023.1270700

Ingebretsen, T. (2023, August 15). Arendalsuka 2023: Kunstig intelligens - skolens digitale dilemma [Arendal week 2023: Artificial intelligence – the school's digital dilemma]. Retrieved from https://www.facebook.com/Osloskolen/videos/1927546080954640

Klassen, R. M., & Chiu, M. M. (2010). Effects on teachers' self-efficacy and job satisfaction: Teacher gender, years of experience, and job stress. Journal of Educational Psychology, 102(3), 741–756. https://doi.org/10.1037/a0019237

Kline, R. B. (2023). Principles and practice of structural equation modeling. Guilford Publications.

Kocoń, J., Cichecki, I., Kaszyca, O., Kochanek, M., Szydło, D., Baran, J., Bielaniewicz, J., Gruza, M., Janz, A., Kanclerz, K., Kocoń, A., Koptyra, B., Mieleszczenko-Kowszewicz, W., Miłkowski, P., Oleksy, M., Piasecki, M., Radliński, Ł., Wojtasik, K., Woźniak, S., & Kazienko, P. (2023). ChatGPT: Jack of all trades, master of none. Information Fusion, 99. https://doi.org/10.1016/j.inffus.2023.101861

Kohnke, L. (2023). L2 learners' perceptions of a chatbot as a potential independent language learning tool. International Journal of Mobile Learning and Organisation, 17(1–2), 214–226. https://doi.org/10.1504/ijmlo.2023.10053355

Krumsvik, R. (2023). Digital kompetanse i KI-samfunnet. [Digital competence in the AI society]. Cappelen Damm Akademisk.

Kuhail, M. A., Alturki, N., Alramlawi, S., & Alhejori, K. (2023). Interacting with educational chatbots: A systematic review. Education and Information Technologies, 28, 973–1018. https://doi.org/10.1007/s10639-022-11177-3

Larsen, E., Møller, J., & Jensen, R. (2020). Constructions of professionalism and the democratic mandate in education: A discourse analysis of Norwegian public policy documents. Journal of Education Policy, 37(1), 1–20. https://doi.org/10.1080/02680939.2020.1774807

Lawler, E. E., & Suttle, J. L. (1973). Expectancy theory and job behavior. Organizational Behavior and Human Performance, 9(3), 482–503. https://doi.org/10.1016/0030-5073(73)90066-4

Lien, T. (2024, January 8). Private email to the first author.

Lo, C. K. (2023). What is the impact of ChatGPT on education? A rapid review of the literature. Education Sciences, 13(4). https://doi.org/10.3390/educsci13040410

Lortie, D. C. (2020). Schoolteacher: A sociological study. University of Chicago Press.

Ministry of Education and Research & Association of Municipalities. (2023). Strategi for digital kompetanse og infrastruktur i barnehage og skole [Strategy for digital competence and infrastructure in kindergartens and schools]. Retrieved from https://www.regjeringen.no/no/dokumenter/strategi-for-digital-kompetanse-og-infrastruktur-i-barnehage-og-skole/id2972254/?ch=8

Moltudal, S., Høydal, K., & Krumsvik, R. J. (2020). Glimpses into real-life introduction of adaptive learning technology: A mixed methods research approach to personalised pupil learning. Designs for Learning, 12(1), 13–28. https://doi.org/10.16993/dfl.138

Moltudal, S. H., Krumsvik, R. J., & Høydal, K. L. (2022). Adaptive Learning Technology in Primary Education: Implications for Professional Teacher Knowledge and Classroom Management. Frontiers in Education, 7. https://doi.org/10.3389/feduc.2022.830536

Montenegro-Rueda, M., Fernández-Cerero, J., Fernández-Batanero, J. M., & López-Meneses, E. (2023). Impact of the implementation of ChatGPT in education: A systematic review. Computers, 12(8), 153. https://doi.org/10.3390/computers12080153

Nagelhout, R. (2023). Academic resilience in a world of artificial intelligence: Guidance for educators as generative AI changes the classroom and the world at large. Harvard Graduate School of Education. Retrieved from https://www.gse.harvard.edu/ideas/usable-knowledge/23/08/academic-resilience-world-artificial-intelligence

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., & Qiao, M. S. (2021). Conceptualizing AI literacy: An exploratory review. Computers and Education: Artificial Intelligence, 2. https://doi.org/10.1016/j.caeai.2021.100041

Niemi, H., Pea, R. D., & Lu, Y. (2023). Introduction to AI in learning: Designing the future. In H. Niemi, R. D. Pea, & Y. Lu (Eds.), AI in learning: Designing the future. Springer. https://doi.org/10.1007/978-3-031-09687-7_1

Noys, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative artificial intelligence. Science, 381(6654), 187–192. https://doi.org/10.1126/science.adh2586

Osloskolen. (2024). Internal message to schools in the Municipality of Oslo.

Osvold, K. B. (2023). Det blir flere og flere robottekster i skolen. Det er en katastrofe. [There are more and more robot texts in school. It's a disaster]. Aftenposten. Retrieved from https://e-avis.aftenposten.no/titles/aftenposten/1445/publications/91797/pages/26/articles/1930442/27/1

Pagano, A., Mørch, A., Santa Barletta, V., & Andersen, R. (2023). Preface: Special issue: AI for humans and humans for AI: Towards cultures of participation in the digital age. IxD&A – Interaction Design and Architecture(s) Journal, 59(1), 5–16. https://doi.org/10.55612/s-5002-059-001psi

Pavlik, J. V. (2023). Collaborating with ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education. Journalism & Mass Communication Educator, 78(1), 84–93. https://doi.org/10.1177/10776958221149577

Skaalvik, E. M., & Skaalvik, S. (2007). Dimensions of teacher self-efficacy and relations with strain factors, perceived collective teacher efficacy, and teacher burnout. Journal of Educational Psychology, 99(3), 611–625. https://doi.org/10.1037/0022-0663.99.3.611

Strzelecki, A. (2023). To use or not to use ChatGPT in higher education? A study of students' acceptance and use of technology. Interactive Learning Environments. https://doi.org/10.1080/10494820.2023.2209881

Sullivan, M., Kelly, A., & McLaughlan, P. (2023). ChatGPT in higher education: Considerations for academic integrity and student learning. Journal of Applied Learning and Teaching, 6(1). https://doi.org/10.37074/jalt.2023.6.1.17

Sung, G., Guillain, L., & Schneider, B. (2023). Can AI help teachers write higher quality feedback? Lessons learned from using the GPT-3 engine in a makerspace course. In: P. Blikstein, J. Van Aalst, R. Kizito, & K. Brennan (Eds.), Proceedings of the 17th International Conference of the Learning Sciences - ICLS 2023 (pp. 2093–2094). International Society of the Learning Sciences. https://doi.org/10.22318/icls2023.904961

Susnjak, T. (2022). ChatGPT: The end of online exam integrity? arXiv (preprint). https://doi.org/10.48550/arXiv.2212.09292

The Norwegian Directorate for Education and Training. (2023). Kompetansepakke om kunstig intelligens i skolen [Competence package on artificial intelligence in schools]. Retrieved from https://www.udir.no/kvalitet-og-kompetanse/digitalisering/kompetansepakke-om-kunstig-intelligens-i-skolen/

Tschannen-Moran, M., Hoy, A. W., & Hoy, W. K. (1998). Teacher efficacy: Its meaning and measure. Review of Educational Research, 68(2), 202–248. https://doi.org/10.2307/1170754

Venkatesh, V., Thong, J. Y. L., & Xu, X. (2012). Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157–178. https://doi.org/10.2307/41410412

Wathne, M. (2023). Bare legg ned hele skolen! [Just shut down the whole school!]. Stavanger Aftenblad. Retrieved from https://www.aftenbladet.no/meninger/debatt/i/bgPXzl/bare-legg-ned-hele-skolen?fbclid=IwAR3uJIlAB37nLJtT4KdMLHrl0ls2FrBjSG0Dcgp8ILJ9TapOQH0XQS31kCY

Yilmaz, R., & Yilmaz, F. G. K. (2023). The effect of generative artificial intelligence (AI)-based tool use on students' computational thinking skills, programming self-efficacy, and motivation. Computers and Education: Artificial Intelligence, 4. https://doi.org/10.1016/j.caeai.2023.100147

Zhong, T., Zhu, G., Hou, C., Wang, Y., & Fan, X. (2023, September 26). The Influences of ChatGPT on Undergraduate Students’ Perceived and Demonstrated Interdisciplinary Learning. edarXiv (preprint). https://doi.org/10.35542/osf.io/nr3gj