School of Applied Educational Sciences and Teacher Education University of Eastern Finland, Joensuu

Email: teemu.valtonen@uef.fi

School of Applied Educational Sciences and Teacher Education University of Eastern Finland, Joensuu

School of Applied Educational Sciences and Teacher Education University of Eastern Finland, Savonlinna

School of Applied Educational Sciences and Teacher Education University of Eastern Finland, Joensuu

Future skills, so-called 21st century skills, emphasise collaboration, creativity, critical thinking, problem-solving and especially ICT skills (Voogt & Roblin, 2012). Teachers have to be able to use various pedagogical approaches and ICT in order to support the development of their students’ 21st century skills (Voogt & Roblin, 2012). These skills, particularly ICT skills, pose challenges for teachers and teacher education. This paper focuses on developing an instrument for measuring pre-service teachers’ knowledge related to ICT in the context of 21st century skills.

Technological Pedagogical Content Knowledge (TPACK; Mishra & Koehler, 2006) was used as a theoretical framework for designing the instrument. While the TPACK framework is actively used, the instruments used to measure it have proven challenging. This paper outlines the results of the development process of the TPACK-21 instrument. A new assessment instrument was compiled and tested on pre-service teachers in Study1 (N=94). Based on these results, the instrument was further developed and tested in Study2 (N=267). The data of both studies were analysed using multiple quantitative methods in order to evaluate the psychometric properties of the instruments. The results provide insight into the challenges of the development process itself and also suggest new solutions to overcome these difficulties.

Keywords: TPACK, 21st century skills, measurement instrument

Today’s working life poses new challenges for educational systems around the world. Schools and teachers have to provide their students with skills for working in multidisciplinary teams with ill-defined problems by making good use of ICT environments. Students have to learn how to find and analyse information in order to create novel products, new knowledge and better services (Silva, 2009; Griffin, Care, & McGaw, 2012). These skills expected in working life are often referred to as 21st century skills (Griffin et al., 2012). Several organisations have proposed definitions of 21st century skills (Assessment and Teaching of 21st Century Skills, Partnership for 21st Century Skills, OECD’s Definition and Selection of Competences, and European Union’s Key Competences for Lifelong Learning). Common to all the definitions is an emphasis on skills for collaboration, communication, ICT literacy, social and/or cultural competencies and creativity as well as critical thinking and problem-solving (Voogt & Roblin, 2012; Voogt, Erstad, Dede, & Mishra, 2013). What is also common is the central role of ICT; today’s and future workers have to be able to take advantage of different ICT tools and applications as a means of work (Voogt & Roblin, 2012; Voogt, Erstad et al., 2013).

In order to provide their students with 21st century skills, teachers need to be able to use various pedagogical approaches with appropriate ICT applications (Voogt, Erstad et al., 2013). These expectations affect teachers’ work and, consequently, teacher education: in addition to more traditional contents and skills such as mathematics and literature, 21st century skills as more generic skills need to be integrated into education as both a means and a goal of learning (cf. Rotherham & Willingham, 2009; Silva, 2009). Despite the hypothesis that the current pre-service teacher generation has grown up as a “Net Generation” (Prensky, 2001), it seems that today’s pre-service teachers need support in developing their skills to use ICT for teaching and learning (see Lei, 2009). Not surprisingly, it seems that growing up surrounded by electronic gadgets and being an active user of various ICT applications do not by themselves prepare a “Digital Native” to be a successful 21st century teacher. Pre-service teachers seem to have difficulties in understanding both the pedagogical potential of various everyday technologies (Valtonen, Pöntinen, Kukkonen, Dillon, Väisänen, & Hacklin, 2011; Lei, 2009) and the connection between the use of ICT and the development of 21st century skills.

In this paper, we report the results of the design and evaluation process of creating a Technological Pedagogical Content Knowledge (TPACK; see Mishra & Koehler, 2006) questionnaire, which is psychometrically sound and pedagogically grounded on 21st century skills. This revised TPACK questionnaire is designed particularly for pre-service teachers.

Theoretical Background

The TPACK framework was developed for studying and describing knowledge related to teachers’ use of ICT in education (Mishra & Koehler, 2006; Koehler, Mishra, & Cain, 2013). TPACK consists of three areas of knowledge: technology, pedagogy and content. These areas and their combinations are presented in Table 1.

| Area of measurement | Acronym | Explanation |
|---|---|---|
| Content knowledge | CK | Central theories and concepts of the field, including the nature of the knowledge and means of inquiry. |
| Pedagogical knowledge | PK | Knowing the processes and mechanisms of learning and ways to support and guide students’ learning process. |
| Technology knowledge | TK | Knowing the possibilities and constraints of different technologies and being able to use the technologies available. Technology knowledge also refers to interest in the development of new technologies. |
| Pedagogical content knowledge | PCK | How a teacher can facilitate certain students’ learning of certain contents, and what kind of learning environments, activities, collaboration etc. are needed. |
| Technological pedagogical knowledge | TPK | Knowledge of how different pedagogical approaches can be supported with different technologies. TPK refers to general knowledge concerning the possibilities of technology in education. |
| Technological content knowledge | TCK | Knowledge of how technology is used within a certain discipline, such as mathematics or history. |

TPACK is a combination of different elements defined as “an understanding that emerges from interactions among content, pedagogy, and technology knowledge [...] underlying truly meaningful and deeply skilled teaching with technology” (Koehler et al., 2013, p. 66). We assume that teachers with high-level TPACK are more capable of choosing appropriate pedagogical approaches for supporting students’ learning of certain content and the related 21st century skills and, furthermore, actively take advantage of appropriate technologies to support these learning processes.

Features of Previous TPACK Measurement Instruments

The TPACK framework has been actively used since it was first introduced (see Voogt, Fisser, Roblin, Tondeur, & van Braak, 2013), and several instruments have been designed for measuring it (see Schmidt, Baran, Thompson, Mishra, Koehler, & Shin, 2009; Koh & Sing, 2011; Lee & Tsai, 2010). However, two main challenges related to the available instruments remain: their psychometric features and the nature of pedagogical knowledge.

Firstly, some difficulties in the psychometric features of the instruments were caused by the assumption that TPACK consists of seven different areas as separate factors. According to Graham (2011), the separation of these seven areas into independent factors is difficult. The psychometric properties of the instruments have mainly been studied with exploratory factor analysis (EFA), including principal component analysis (PCA), although confirmatory factor analysis (CFA) and different approaches with structural equation models (SEMs) have also been used. One of the earliest and still actively used questionnaires for measuring TPACK is the Survey of Preservice Teachers’ Knowledge of Teaching and Technology (SPTKTT) by Schmidt et al. (2009). Despite its active use, SPTKTT has attracted criticism because its validation relied on PCA conducted separately for each of the seven areas of TPACK (see Chai, Koh, Tsai, & Tan, 2011). Altogether, the psychometric challenges have particularly concerned how the constructs (factors) of TPACK are formed. In other words, the combination of separate elements in the constructs has varied between studies.

In a study reported by Chai et al. (2011), only five out of seven elements of TPACK were found, excluding TCK and PCK. Koh, Chai and Tsai (2010) reported a five-factor model aligning with the TPACK framework. In the study by Archambault and Barnett (2010), the only factor emerging as expected was TK, while the remaining six elements were combined into three factors. Interestingly, Pamuk, Ergun, Cakir, Yilmaz and Ayas (2013) reported a study where all seven elements of TPACK separately aligned with the TPACK framework. However, this study is difficult to evaluate because the pedagogical aspects of the questionnaire and the methods used were unclear.

The second challenge with the available instruments concerns the nature of pedagogical knowledge. According to Brantley-Dias and Ertmer (2013), the TPACK framework can be described as a pedagogically free frame that can be used for different pedagogical approaches. This feature of TPACK can be clearly seen within different questionnaires designed for measuring TPACK. For example, the SPTKTT questionnaire by Schmidt et al. (2009) focuses on pedagogical themes on a very general level, such as “I know how to assess student performance in a classroom”, “I can adapt my teaching based upon what students currently understand or do not understand” and “I know how to organize and maintain classroom management”. Yet, there have been attempts to explicate pedagogical knowledge as in the questionnaire by Koh and Sing (2011). In their study, the statements in the questionnaire concerning pedagogical knowledge were built on the theory of meaningful learning by Jonassen et al. (1999). This approach highlights students’ active role in their own learning and group activities, and it was implemented in terms of the following statements: “I am able to help my students to monitor their own learning”, “I am able to help my students to reflect on their learning strategies” and “I am able to plan group activities for my students”.

The role of pedagogical knowledge has been indicated as a central factor affecting the success of using ICT in education (cf. Ertmer, 2009; Guerrero, 2005). In the case of 21st century skills, there is not necessarily anything new; rather, they are newly important compilations of different skills (Silva, 2009). Yet, Voogt, Erstad et al. (2013) argue that teachers must know various pedagogical approaches and ways to take advantage of ICT for supporting the development of students’ 21st century skills. From this perspective, we assume that questionnaires measuring TPACK need to be pedagogically well grounded so that they measure teachers’ knowledge of specific pedagogical approaches, in this case approaches aligning with 21st century skills.

Developing a New Measurement Instrument According to Pedagogical Approaches that Support 21st Century Skills

In the development process, we investigated the new instrument in two phases (Study1 and Study2). The main focus of this process was to design a psychometrically sound TPACK measurement instrument whose pedagogical practices align with 21st century skills, especially in the teacher education context. For this purpose, three starting points were used. Firstly, the questionnaire by Koh and Sing (2011) provided an example of how to better implement pedagogical knowledge in a certain well-studied theory of learning. Secondly, the pedagogical perspectives of 21st century skills were seen as a basis for defining the pedagogical goals that this questionnaire aimed to measure. While pedagogical goals were based on different definitions of 21st century skills (Voogt, Erstad et al., 2013; Voogt & Roblin, 2012; Mishra & Kereluik, 2011), they ended up stressing collaborative learning practices with an emphasis on skills for creative and critical thinking, skills for learning and self-regulation and skills for collaborative problem-solving. These themes are related to all categories of pedagogical knowledge (PK, PCK, TPK and TPACK).

Thirdly, in areas of PCK and TPK, we included statements that are similar to the statements in the questionnaire by Schmidt et al. (2009). The reason for including previously tested elements was that we wanted to have a reference between editions of subscales and an additional safety line if the editions were to demonstrate inadequate properties. We considered this important as in the early stages of the measurement instrument development, it was not clear how these statements would influence the structure of the instrument combined with the new statements focusing on 21st century skills.

Items related to content knowledge were divided into two parts. First, there are statements measuring content knowledge aligning with the questionnaire by Schmidt et al. (2009). These statements measure how highly pre-service teachers assess their knowledge related to the more traditionally understood content knowledge of, for example, history, mathematics or literature. In this case, the selected content was science. In addition to these, there were statements for measuring how well pre-service teachers know the principles and mechanisms of 21st century skills, such as collaboration, critical thinking, problem solving etc. (Voogt, Erstad et al., 2013). We argue that in addition to knowledge related to traditional subject content such as mathematics and literature, today’s students and especially teachers should be aware of the practices and mechanisms of the 21st century skills as a new area of content knowledge to be gained at school.

TCK strongly stresses the idea of how ICT is used within different disciplines outside schools in working life—that is, how professionals of science or mathematics are taking advantage of the ICT in their work. In the study by Schmidt et al. (2009), this area contained only one statement for each content area: “I know about technology that I can use for understanding and doing [different content]”. This is taken into account when building a TCK subscale for different content areas and with a stronger set of items.

Technological knowledge (TK) in this instrument contains two areas that focus on the general level of teachers’ interest and skills in using ICT and current technology in education. Because of the fast development of ICT, the questionnaire contains general-level statements focusing on respondents’ interest in the development of ICT and their ability to take advantage of various ICT applications, as in the questionnaire by Schmidt et al. (2009). In order to gain a more precise picture of the respondents’ interest in the development of ICT, we added items measuring their knowledge of currently popular ICT applications in education: social software, tablet computers, touch boards and the production of online materials.

According to Chai, Koh and Tsai (2010), pre-service teachers’ underdeveloped professional knowledge causes difficulties in acknowledging and distinguishing between the elements of TPACK. Similar to Roblyer and Doering (2010) and Doering, Veletsianos, Scharber and Miller (2009), we suggest that TPACK should be considered a metacognitive tool for enhancing technology integration into teaching and learning to help understand how different knowledge areas work in tandem and to identify personal strengths and developmental areas. For this reason and in addition to mere research purposes, the questionnaire was designed as a tool for opening the TPACK framework for teachers. The questionnaire consists of seven sections aligning with the elements of the TPACK, and at the beginning of each section, scaffolding texts were provided to briefly outline the core of each area of TPACK. In this way, we assume, the respondents might become aware of TPACK’s theoretical framework as well as the structure of the TPACK framework, and this activity could trigger respondents’ thinking of different TPACK elements and thus support their reflective thinking and professional development. The following is an example of a piece of scaffolding text for TPK, which was preceded by TK, PK and CK:

Ok, now you have assessed your content knowledge, technological knowledge and pedagogical knowledge. Next, think about combining these areas; think about your knowledge concerning how well you know how to use different ICT applications for supporting your pedagogical aims and pedagogical practices. In which areas do you find your knowledge strong and where do you think you need more knowledge?

In addition to the pedagogical grounding, we focused on the psychometric properties of the new measurement instrument and specifically on reliability and validity. Reliability refers to the reproducibility or consistency of measurement scores, and while different types of reliability exist, we focused on the internal consistency referring to the degree to which responses on a measurement are similar to one another (e.g. AERA, APA, NCME, 2014). Validity refers to “the degree to which evidence and theory support the interpretations of test scores entailed by proposed uses of tests[…] the process of validation involves accumulating evidence to provide a sound scientific basis for the proposed score interpretations” (AERA, APA, NCME, 2014, p. 11). Generally, validity is a complex and multidimensional aspect of measurement instrument development, and different aspects of validity exist. In this study, we investigated the initial construct validity of the new instrument. Therefore, the purpose of this study was also to separately investigate the developed instrument with independent samples. We have named these studies Study1, which was the pilot phase of the investigation, and Study2, which was the phase used to investigate the structure more thoroughly.

Participants

Two sub-studies were carried out in this paper: Study1 and Study2. Both studies had target groups of first-year (novice) pre-service teachers (in Finland, pre-service teacher education is university education). After graduation, these pre-service teachers will mainly work as classroom teachers, which in the Finnish basic education system means grades 1 to 6 (pupils aged 7 to 12). Study1 included 96 pre-service teachers from one Finnish university (female: n = 59; male: n = 34), of which 63% were female. In Study2, the sample size was 267 pre-service teachers from three different universities in Finland (female: n = 203; male: n = 64), of which 76% were female. There was a slight difference in gender distribution between these two independent samples, although both samples adequately followed the general gender distribution of Finnish classroom pre-service teacher education, which is dominated by women.

Data Collection

In both phases (i.e. for Study1 and Study2), data collection was conducted as a part of the pre-service teachers’ courses. The aim of the study was explained to the target groups. Pre-service teachers were not obliged to take part in the study. Furthermore, permission for conducting the study was acquired from the head of the department of the teacher education units. Study1’s data collection was conducted in the spring of 2013 and Study2 data collection was conducted in late autumn of 2014 and early spring of 2015. The data collections were administered with online questionnaires. In both phases (i.e. Study1 and Study2), the questionnaire was designed to serve research purposes as well as a reflective thinking tool for ICT in teaching and learning for pre-service teachers, thus supporting the learning goals of the courses.

Data Analysis

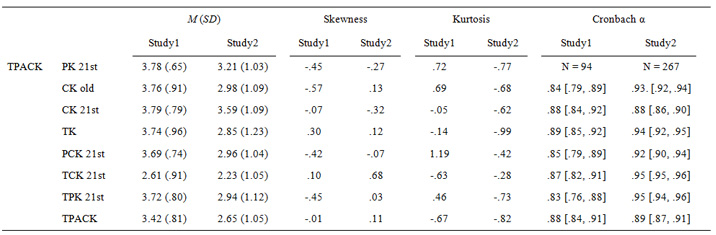

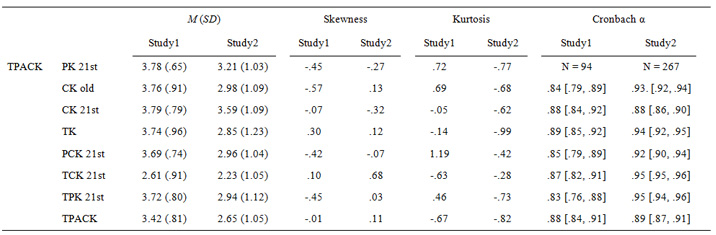

SPSS v22 was used in the statistical analysis of both studies. We used descriptive statistics (M, SD, skewness, kurtosis), internal consistency (for Study1 and Study2) and exploratory factor analysis for the initial factor structure investigation of the developed TPACK-21 instrument for Study2. Internal consistency was estimated with Cronbach’s alpha (α) and its associated 95% confidence intervals. A well-structured scale and its items should have an alpha level of 0.7 or higher (e.g. Nunnally, 1978), indicating a good combination of observed item responses and a reliable scale. Furthermore, we estimated the 95% confidence intervals for the α coefficients in order to evaluate the precision of the point estimates—that is, the plausible range of α estimate with several replications of the analysis. We hypothesised that the α-level of Study2 would fall into the range or should be higher compared to the Study1 α and its confidence intervals, indicating the sufficient development of the TPACK-21 instrument.
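The internal-consistency step described above can be illustrated with a short sketch. The authors ran their analyses in SPSS and do not state how the confidence intervals were computed, so the percentile bootstrap used here is an assumption made purely for illustration, and the response matrix `sample_rows` is hypothetical data on the instrument’s 1 to 6 scale, not data from the study.

```python
import random
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def bootstrap_ci(rows, n_boot=1000, level=0.95, seed=0):
    """Percentile bootstrap interval for alpha (an assumed method, see lead-in)."""
    rng = random.Random(seed)
    n = len(rows)
    estimates = []
    while len(estimates) < n_boot:
        sample = [rows[rng.randrange(n)] for _ in range(n)]
        # Skip degenerate resamples in which every total score is identical.
        if variance([sum(r) for r in sample]) > 0:
            estimates.append(cronbach_alpha(sample))
    estimates.sort()
    lower = estimates[int((1 - level) / 2 * n_boot)]
    upper = estimates[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper


# Hypothetical responses: 10 respondents x 4 items of one subscale.
sample_rows = [
    [2, 3, 2, 3], [4, 4, 5, 4], [3, 3, 3, 2], [5, 6, 5, 5], [1, 2, 2, 1],
    [4, 5, 4, 4], [3, 2, 3, 3], [6, 5, 6, 6], [2, 2, 1, 2], [5, 4, 5, 5],
]

alpha = cronbach_alpha(sample_rows)
ci_low, ci_high = bootstrap_ci(sample_rows)
print(f"alpha = {alpha:.2f}, 95% CI [{ci_low:.2f}, {ci_high:.2f}]")
```

A subscale would meet the criterion stated above when the point estimate is at least .70; the width of the interval indicates how precisely that estimate is known for the given sample size.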

To evaluate the validity of TPACK-21, we used two independent data sets (Study1 and Study2) to test its initial structure. When the theoretical factor structure can be supported with independent empirical data, this can be considered initial evidence for the validity of TPACK-21. This was investigated with exploratory factor analysis (EFA), using the principal axis factoring (PAF) method with oblique (direct oblimin) rotation on the Study2 data. Oblique rotation was used because we expected the factors to be correlated. Extraction was based on eigenvalues greater than 1. The following criteria were used to interpret the results of the EFA: the Kaiser-Meyer-Olkin (KMO) measure, Bartlett’s Test of Sphericity, eigenvalues, the scree plot, the amount of variance explained and the rotated factor loadings. We expected the KMO to reach a value of .9 to indicate distinct, reliable factors and an adequate sample size. Further, Bartlett’s test should be significant, indicating that the correlations between variables are significantly different from zero, and each retained factor should have an eigenvalue higher than one, which can be confirmed by viewing the scree plot.
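For reference, the two adequacy checks named above have standard textbook definitions, sketched below. The notation is introduced here for illustration and does not come from the paper: $n$ is the number of respondents, $p$ the number of items, $R$ the item correlation matrix with entries $r_{ij}$, and $q_{ij}$ the partial correlation between items $i$ and $j$ controlling for all other items.

```latex
% Bartlett's Test of Sphericity: tests whether R is an identity matrix
\[
\chi^2 = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert,
\qquad
df = \frac{p(p-1)}{2}
\]

% Kaiser-Meyer-Olkin measure of sampling adequacy (ranges from 0 to 1)
\[
\mathrm{KMO} =
\frac{\sum_{i \neq j} r_{ij}^{2}}
     {\sum_{i \neq j} r_{ij}^{2} + \sum_{i \neq j} q_{ij}^{2}}
\]
```

The KMO approaches 1 when the partial correlations are small relative to the raw correlations, that is, when the items share enough common variance for factoring to be meaningful.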

TPACK-21 Instrument (Study1)

The design of the updated TPACK measurement instrument began with a review of earlier TPACK questionnaires. Based on the review, the first version of the instrument was built. Five experts in the area of ICT in teaching and learning were asked to read and comment on the developed instrument (face validity). The subscales of Study1 were PK, CK (the content/subject area being science), TK, PCK, TCK, TPK and TPACK. In addition, each of the subscales (except TCK) was divided into two: TPACK statements following the Schmidt et al. (2009) questionnaire and newly added 21st century skills self-evaluating TPACK statements. Finally, a measurement instrument with 13 subscales and 86 items rated on a six-point Likert-type scale (1 = I need a lot of additional information about the topic; 2 = I need some additional information about the topic; 3 = I need a little additional information about the topic; 4 = I have some information about the topic; 5 = I have good knowledge about the topic; 6 = I have strong knowledge about the topic) was designed. Examples of these 21st century skills self-evaluating TPACK statements are as follows: PK: “Guiding students’ discussions during group work (2–5 students)”; CK: “Principles of collaborative learning”; TK: “Familiarity with new technologies and their features”; PCK: “In teaching natural sciences, I know how to guide students’ content-related problem solving in groups (2–5 students)”; TPK: “In teaching, I know how to make use of ICT as a medium for sharing ideas and thinking together”; and TCK: “I know technologies which I can use to illustrate difficult contents in natural sciences”.

Results of Study1

The aim of Study1 was specifically to test the reliability and initial validity of the newly developed TPACK-21 instrument. In Table 2, we present the mean (M), standard deviation (SD), skewness, kurtosis and internal consistencies (Cronbach’s α) of the TPACK-21 instrument for both studies (Study1 and Study2) in order to facilitate the comparison of the instruments. The alphas for Study1 were adequate, ranging from .83 to .89 (criterion: α > .70, preferably > .80), indicating the initial reliability of the TPACK-21 instrument (Table 2). Moreover, the CIs of alpha were adequate, indicating that if these analyses were to be performed repeatedly, the alpha level would remain adequate. The mean values of Study1 indicate that respondents reported having some information, or needing only a little additional information, about the TPACK-21 areas, except for TCK (M = 2.61, SD = .91), in which respondents seemed to need more information (response options ranged from 1 to 6).

Table 2 Mean (M), Standard Deviation (SD), skewness, kurtosis, and Internal Consistency [95% CI] (scale 1 = I need a lot of additional information about the topic; 6 = I have strong knowledge about the topic)

Modifications of the TPACK-21 Instrument for Study2

The results from Study1 indicated adequate to good internal consistency of subscales measured by Cronbach’s alpha. Still, based on various analyses, there were challenges with the instrument that demanded further development for Study2. First, the structure of the Study1 measurement did not follow the TPACK framework. Based on these results, all statements were carefully re-evaluated in order to find possible difficult wordings, challenging concepts and double statements (two statements in one statement). When these difficult statements were removed, the structure became more observable. Also, some statements were re-written in order to be tested in Study2.

Second, some concepts used in the Study1 questionnaire caused difficulties for respondents. Statements focusing on critical thinking and creative thinking correlated strongly (Pearson’s r over .70). We assume that the reason for this was that first-year pre-service teachers had difficulties in separating these areas in the learning context. Again, some statements were redefined for the second study. Also, it seemed that concepts such as reflection, collaborative problem-solving and creative and critical thinking were difficult for the first-year pre-service teachers. For this reason, we added a short dictionary to the beginning of the instrument. In this dictionary, we defined the meanings of these concepts (see Appendix 1). We assume that in addition to mere research purposes, this instrument needs to serve as a tool for supporting pre-service teachers’ professional development and reflective thinking.
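The redundancy check described above (item pairs with Pearson’s r over .70) can be sketched as follows. The item names and scores are hypothetical and chosen only for illustration; the threshold of .70 is the one mentioned in the text.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equally long score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def strongly_correlated_pairs(items, threshold=0.70):
    """Return (item_a, item_b, r) for every item pair with r above the threshold."""
    pairs = []
    for a, b in combinations(items, 2):
        r = pearson_r(items[a], items[b])
        if r > threshold:
            pairs.append((a, b, r))
    return pairs


# Hypothetical responses of six respondents to three statements.
responses = {
    "critical_thinking": [2, 3, 4, 5, 3, 4],
    "creative_thinking": [2, 3, 5, 5, 3, 4],
    "tablet_use": [4, 4, 3, 4, 5, 3],
}

flagged = strongly_correlated_pairs(responses)
for a, b, r in flagged:
    print(f"{a} vs {b}: r = {r:.2f}")
```

Pairs flagged in this way are candidates for rewording or for an added definition, since a very high correlation suggests that respondents did not distinguish between the two concepts.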

Third, based on the mean values and the skewed distribution of results, it seems that some statements were too easy for the respondents. This was especially visible in the TK section, where respondents evaluated their knowledge related to the use of separate ICT applications as very high. In order to avoid the ceiling effect, some statements were made more difficult. For example, the statement “I can use social software for teaching” was changed to “I am an expert on using social software for teaching”. These changes were made in order to better take into consideration the character of today’s pre-service teachers’ knowledge and also to support their reflective thinking and professional development. The new questionnaire, which included 54 items (some of them modified) and a dictionary, was tested in Study2. The subscale structure was similar to the Study1 version of the TPACK-21 instrument.

Results of Study2

The exploratory factor analysis (EFA) with the principal axis factoring (PAF) method and oblique rotation was first conducted for all 54 items, including the TPACK statements. However, the 54 items did not produce an interpretable factor structure, as the TPACK statements loaded strongly on the items of the other subscales (PK, CK, TK, PCK, TPK and TCK). We therefore removed the TPACK items from the second EFA, which produced an adequate initial factor structure with 36 items. A KMO value of 0.93 verified sampling adequacy, and a significant (p < .001) Bartlett’s Test of Sphericity indicated that the variable correlations were different from zero. Seven factors were extracted based on the eigenvalue criterion (> 1), and this was confirmed by reading the scree plot. Table 3 presents the factor loadings after rotation, the eigenvalues and the percentage of explained variance. The clustering of items in the EFA indicates that the separate factors represent the designated factor structure as hypothesised.

Rotated Factor Structure

| Item | PK21st | CK old | CK 21st | TK | PCK 21st | TPK 21st | TCK 21st |
|---|---|---|---|---|---|---|---|
| PK1 | .713 | | | | | | |
| PK2 | .682 | | | | | | |
| PK3 | .861 | | | | | | |
| PK4 | .761 | | | | | | |
| PK5 | .844 | | | | | | |
| PK6 | .809 | | | | | | |
| PK7 | .648 | | | | | | |
| CK1 | | .685 | | | | | |
| CK2 | | .882 | | | | | |
| CK3 | | .687 | | | | | |
| CK4 | | .475 | | | | | |
| CK5 | | | -.720 | | | | |
| CK6 | | | -.754 | | | | |
| CK7 | | | -.863 | | | | |
| CK8 | | | -.796 | | | | |
| CK9 | | | -.829 | | | | |
| TK1 | | | | .796 | | | |
| TK2 | | | | .999 | | | |
| TK3 | | | | .899 | | | |
| TK4 | | | | .677 | | | |
| PCK1 | | | | | .847 | | |
| PCK2 | | | | | .815 | | |
| PCK3 | | | | | .822 | | |
| PCK4 | | | | | .769 | | |
| PCK5 | | | | | .816 | | |
| PCK6 | | | | | .701 | | |
| TPK1 | | | | | | -.506 | |
| TPK2 | | | | | | -.658 | |
| TPK3 | | | | | | -.938 | |
| TPK4 | | | | | | -.845 | |
| TPK5 | | | | | | -.798 | |
| TPK6 | | | | | | -.697 | |
| TCK1 | | | | | | | -.536 |
| TCK2 | | | | | | | -.841 |
| TCK3 | | | | | | | -.832 |
| TCK4 | | | | | | | -.623 |
| Eigenvalues | 16.3 | 2.22 | 1.03 | 4.42 | 1.17 | 1.40 | 1.90 |
| % of variance | 45.28 | 6.15 | 2.85 | 12.27 | 3.25 | 3.87 | 5.27 |

Note. Factor loadings below .40 are omitted from the table. PK = Pedagogical Knowledge, CK = Content Knowledge, TK = Technological Knowledge, PCK = Pedagogical Content Knowledge, TPK = Technological Pedagogical Knowledge, TCK = Technological Content Knowledge. TPACK did not load separately in the EFA.

Table 3 Exploratory Factor Analysis (Principal Axis Factoring) with oblique rotation
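The relationship between the eigenvalues and the percentages in Table 3 can be checked with a small sketch. It assumes that each percentage is the factor’s eigenvalue divided by the number of analysed items (36); the paper does not state this, but the reported values are consistent with it up to rounding. The variable names are illustrative.

```python
N_ITEMS = 36  # items retained in the second EFA

# Eigenvalues and percentages of variance as reported in Table 3.
eigenvalues = {
    "PK21st": 16.3, "CK old": 2.22, "CK 21st": 1.03, "TK": 4.42,
    "PCK 21st": 1.17, "TPK 21st": 1.40, "TCK 21st": 1.90,
}
reported_pct = {
    "PK21st": 45.28, "CK old": 6.15, "CK 21st": 2.85, "TK": 12.27,
    "PCK 21st": 3.25, "TPK 21st": 3.87, "TCK 21st": 5.27,
}


def percent_variance(eigenvalue, n_items):
    """Share of total variance for standardised items (assumed relationship)."""
    return 100.0 * eigenvalue / n_items


# Extraction rule from the text: retain factors with eigenvalue > 1.
retained = [name for name, ev in eigenvalues.items() if ev > 1.0]

for name in retained:
    computed = percent_variance(eigenvalues[name], N_ITEMS)
    print(f"{name:9s} computed {computed:6.2f}  reported {reported_pct[name]:6.2f}")

print(f"cumulative reported variance: {sum(reported_pct.values()):.2f}%")
```

Small differences between computed and reported values are expected because the eigenvalues in the table are themselves rounded.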

The alphas of Study2 ranged from .88 to .95. The alpha levels also increased systematically between Study1 and Study2, as expected; however, this information should be interpreted with caution, as differences in sample size may influence the increase. The means of Study2 (M varied from 2.23 to 3.59) were systematically lower, as expected, because we aimed to make the items more difficult for respondents to answer in order to form a more realistic picture of pre-service teachers’ TPACK and to obtain more variance and less skewed/kurtotic data (see Table 2). The results of Study2 indicate that first-year pre-service teachers require more information about the TPACK areas. Generally, the Study1 data were approximately normally distributed, and with the development modifications to the TPACK-21 instrument, we were able to obtain normally distributed data from Study2.

The aim of this study was to develop and evaluate a measurement instrument for the assessment of pre-service teachers’ Technological Pedagogical Content Knowledge (TPACK) that aligns with 21st century skills (i.e. TPACK-21). We focused particularly on initial reliability and validity. For this purpose, two sub-studies in two phases, Study1 and Study2, were conducted. Focusing on descriptive statistics, internal consistency and the factor structure, we can conclude that the development and redesigning of the questionnaire has been adequate, and it has improved the quality of the TPACK-21 instrument.

The original TPACK structure proposed by Mishra and Koehler (2006) was found, although the results from the EFA indicate that the TPACK model works only without the actual TPACK subscale. This raises the question of whether TPACK should be considered a theoretical construct formed as the sum of its parts, perhaps as a higher-order factor structure. The reliability of the factors estimated with Cronbach’s alpha (α) was similar to or higher than that reported in earlier studies (see Pamuk et al., 2013; Koh & Sing, 2011; Lee & Tsai, 2010). The overlap in the α CIs for the TPACK-21 subscales indicates that the reliability in the population may not differ largely between different data sets, as seen in the results of Study1 and Study2.

The changes in mean values for the subscales between Study1 and Study2 indicate that the instrument can be seen as more accurate for separating the respondents with lower and higher TPACK. This is especially important for follow-up studies of TPACK development from first-year pre-service teachers, who have limited knowledge and experience of teaching with ICT, to teachers with more experience. In particular, during the redesigning and testing of the TPACK-21 instrument, it was discovered that scaffolding for first-year pre-service teachers needs to be explicit. Based on this result, we assume that the addition of separate introductory elements with scaffolding text for each TPACK subscale was adequate and helped respondents to better recognise the elements of TPACK (cf. Chai et al., 2010). Certain concepts such as reflection, collaborative problem-solving and creative and critical thinking in the learning context were difficult for first-year pre-service teachers. For this reason, we added a short dictionary to the beginning of the instrument to help with these challenging concepts. Based on the results, we also noticed that some statements were too easy for the respondents. This could be seen especially in the TK section, where respondents rated their knowledge of individual ICT applications very highly. These insights show that designing a TPACK instrument for pre-service teachers is a challenging task. An important question is how this instrument would work with more experienced students; that is, will the instrument need modifications for a follow-up study as these pre-service teachers proceed in their studies?

The results from the EFA provided important insight into the nature of TPACK itself. Discussion on how the different TPACK areas relate to each other, that is, how TPACK should be understood, had already begun with the research on the predecessor of TPACK, pedagogical content knowledge (PCK; see Gess-Newsome, 1999). The burning question now is how the entity of seven areas (including TPACK itself) should be understood: should TPACK be considered one single entity or rather a combination of separate elements (Gess-Newsome, 1999; Graham, 2011)? The results from the EFA align with the view that TPACK should be considered a combination of separate elements, a synthesis in which six elements form the latent TPACK (i.e. a sum of constructs that cannot be directly measured). These questions need further research, for example, by testing the model with Confirmatory Factor Analysis (CFA) techniques.

The aim of this research was not only to create a TPACK instrument for research purposes, but at the same time to create an instrument to trigger reflective thinking and professional development. We assume that this instrument, which includes scaffolding elements, can be a valuable tool for supporting pre-service teachers’ professional development. Based on previous studies, it seems that today’s pre-service teachers’ skills in using ICT in a pedagogically meaningful manner are limited (Lei, 2009; Valtonen et al., 2011). Therefore, the TPACK-21 instrument was designed to support pre-service teachers’ reflective thinking and, on a larger scale, to raise their awareness of their strengths and development needs related to pedagogically meaningful use of ICT in education. This is also an important topic for future studies. Longitudinal research with this instrument will be particularly valuable in shedding light on pre-service teachers’ TPACK development. In addition, the repeated measurements and self-reflection can help pre-service teachers to acknowledge their own development. For future research, pre-service teachers’ experiences with this instrument as a reflective tool are central; that is, how scaffolding with the instrument can support pre-service teachers’ reflective thinking and professional development during teacher education.

Like all research, this study has its limitations. First, the sample included only classroom teachers, although this study can be easily replicated with a wider sample of pre-service teachers. Also, pre-service teachers with different levels of experience (e.g. third-year pre-service teachers) should be included in the research to see whether the initial structure holds. The second limitation is related to the representativeness of the sample. Although the sample covered a large proportion of the teacher education units in Finland, researchers should include more pre-service teacher education units in their future studies. Third, this paper focused on the initial structure of TPACK-21. Future research should include a confirmatory factor analysis and an analysis of measurement invariance. In addition, other aspects of validity and reliability should be included in future investigations. Fourth, only the initial evidence of item-level and scale-level properties was investigated. Future research could include more in-depth studies on these properties, for example with Rasch modelling. Fifth, no attempt was made to examine the study results in light of the ability to use or attitudes toward the use of ICT in teaching and learning. Future researchers should include this aspect in their studies, as the TPACK construct may be influenced by abilities and attitudes. Sixth, this study did not address pre-service teachers’ TPACK development during teacher education or in the early years of in-service teaching. Longitudinal research is needed to understand pre-service teachers’ TPACK development more thoroughly.

Acknowledgements

The research presented in this article is supported by the Academy of Finland (Grant No. 273970).

References

Archambault, L., & Barnett, J. (2010). Revisiting technological pedagogical content knowledge: Exploring the TPACK framework. Computers & Education, 55(4), 1656–1662.

Brantley-Dias, L., & Ertmer, P. (2013). Goldilocks and TPACK: Is the construct “just right?” Journal of Research on Technology in Education, 46(2), 103–128.

Chai, C., Koh, J., & Tsai, C.-C. (2010). Facilitating preservice teachers’ development of technological, pedagogical, and content knowledge (TPACK). Educational Technology & Society, 13(4), 63–73.

Chai, C., Koh, J., Tsai, C., & Tan, L. (2011). Modeling primary school pre-service teachers’ Technological Pedagogical Content Knowledge (TPACK) for meaningful learning with information and communication technology (ICT). Computers & Education, 57(1), 1184–1193.

Doering, A., Veletsianos, G., Scharber, C., & Miller, C. (2009). Using the technological, pedagogical, and content knowledge framework to design online learning environments and professional development. Journal of Educational Computing Research, 41(3), 319–346.

Ertmer, P. (1999). Addressing first- and second-order barriers to change: Strategies for technology integration. Educational Technology Research and Development, 47(4), 47–61.

Gess-Newsome, J. (1999). Pedagogical content knowledge: An introduction and orientation. In J. Gess-Newsome & N. Lederman (Eds.), Examining pedagogical content knowledge (pp. 3–20). Dordrecht: Kluwer.

Graham, C. (2011). Theoretical considerations for understanding technological pedagogical content knowledge (TPACK). Computers & Education, 57(3), 1953–1960.

Griffin, P., Care, E., & McGaw, B. (2012). The changing role of education and schools. In P. Griffin, B. McGaw, & E. Care (Eds.), Assessment and teaching of 21st century skills (pp. 17–66). Heidelberg: Springer.

Guerrero, S. (2005). Teacher knowledge and a new domain of expertise: Pedagogical technology knowledge. Journal of Educational Computing Research, 33(3), 249–267.

Jonassen, D., Peck, K., & Wilson, B. (1999). Learning with technology: A constructivist perspective. Upper Saddle River, NJ: Prentice Hall.

Koehler, M. J., Mishra, P., & Cain, W. (2013). What is technological pedagogical content knowledge (TPACK)? Journal of Education, 193(3), 13–19.

Koh, J., Chai, C. S., & Tsai, C. C. (2010). Examining the technological pedagogical content knowledge of Singapore preservice teachers with a large-scale survey. Journal of Computer Assisted Learning, 26(6), 563–573.

Koh, J., & Sing, C. (2011). Modeling pre-service teachers’ technological pedagogical content knowledge (TPACK) perceptions: The influence of demographic factors and TPACK constructs. Proceedings Ascilite 2011 Hobart: Full Paper.

Lee, M., & Tsai, C. (2010). Exploring teachers’ perceived self-efficacy and technological pedagogical content knowledge with respect to educational use of the World Wide Web. Instructional Science, 38(1), 1–21.

Lei, J. (2009). Digital natives as preservice teachers: What technology preparation is needed? Journal of Computing in Teacher Education, 25(3), 87–97.

Mishra, P., & Kereluik, K. (2011). What 21st century learning? A review and a synthesis. In M. Koehler & P. Mishra (Eds.), Proceedings of Society for Information Technology & Teacher Education International Conference 2011 (pp. 3301–3312). Chesapeake, VA: Association for the Advancement of Computing in Education (AACE).

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for integrating technology in teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Nunnally, J. (1978). Psychometric theory (2nd ed.). New York, NY: McGraw-Hill.

Pamuk, S., Ergun, M., Cakir, R., Yilmaz, H. B., & Ayas, C. (2013). Exploring relationships among TPACK components and development of the TPACK instrument. Education and Information Technologies, 20(2), 241–263.

Prensky, M. (2001). Digital natives, digital immigrants. On the Horizon, 9(5), 1–6.

Roblyer, M. D., & Doering, A. H. (2010). Theory and practice: Foundations for effective technology integration. In M. Roblyer & A. Doering (Eds.), Integrating educational technology into teaching (5th ed.) (pp. 31–70). Boston, MA: Allyn & Bacon.

Rotherham, A. J., & Willingham, D. (2009). 21st century skills: The challenges ahead. Educational Leadership, 67(1), 16–21.

Schmidt, D., Baran, E., Thompson, A., Mishra, P., Koehler, M., & Shin, T. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123–149.

Silva, E. (2009). Measuring skills for 21st-century learning. Phi Delta Kappan, 90(9), 630–634.

Valtonen, T., Pöntinen, S., Kukkonen, J., Dillon, P., Väisänen, P., & Hacklin, S. (2011). Confronting the technological pedagogical knowledge of Finnish net generation student teachers. Technology, Pedagogy and Education, 20(1), 1–16.

Voogt, J., Erstad, O., Dede, C., & Mishra, P. (2013). Challenges to learning and schooling in the digital networked world of the 21st century. Journal of Computer Assisted Learning, 29(5), 403–413.

Voogt, J., Fisser, P., Roblin, N., Tondeur, J., & van Braak, J. (2013). Technological pedagogical content knowledge – a review of the literature. Journal of Computer Assisted Learning, 29(2), 109–121.

Voogt, J., & Roblin, N. P. (2012). A comparative analysis of international frameworks for 21st century competences: Implications for national curriculum policies. Journal of Curriculum Studies, 44(3), 299–321.

_______________________________

Correspondence concerning this article should be addressed to Teemu Valtonen, Philosophical Faculty, School of Applied Educational Sciences and Teacher Education, University of Eastern Finland, PO Box 111, 80101 Joensuu, Finland. E-mail: teemu.valtonen@uef.fi.