Associate Professor

Norwegian University of Science and Technology

arvid.staupe@idi.ntnu.no

This article describes a trial of alternative exam/evaluation formats and of an ICT learning environment at the Department of Computer and Information Science, Norwegian University of Science and Technology (IDI/NTNU). The use of this learning environment is described in a previous article (Staupe, 2010), so this article concentrates on the trial of alternative exam/evaluation formats. The students could choose between three formats:

1) A 100% traditional exam with optional exercise reports; the optional exercises were reviewed and commented on in the same way as for those who chose the second alternative (13%).

2) A 50% traditional exam, 50% exercise work (10%).

3) Exercise work counting for 100% (77%).

In total, 129 students participated.

Keywords: Evaluation, online, alternative, blended learning, learning environment, teaching arrangement, ICT learning, exam format, defection, course completion, choice of exam form, exam, self-assessment, building one’s own learning

I had been working for several years on developing the ICT learning environment, and this article builds closely on the work described in a previous article, Experiences from Blended Learning, Net-based Learning and Mind Tools (Staupe, 2010). On the learning-theory side, I worked from the hypothesis that it is the active student who learns. It is therefore not enough to follow the lectures passively and make an all-out effort just before the exam, or to study only occasionally. It was important to me to design a teaching arrangement in which the students not only focus on facts but also produce and create by using the subject material. My view on this is influenced not least by Steen Larsen, professor at Danmarks Lærerhøjskole (the Royal Danish School of Educational Studies) (Larsen, 1995). Larsen argues that there is a fundamental difference between teaching something and learning it. According to Larsen, recent learning theory equates the terms “learning process” and “work process”; if they are not synonymous, they are at least two sides of the same coin.

For this course, we used a learning environment similar to the one described in Staupe (2010). The central learning forms were discovery learning, problem-based learning and project-based learning. These forms are closely related and fall into the category I choose to call active learning. Discovery learning (http://en.wikipedia.org/wiki/Discovery_learning) follows a learning process built on abstracting, experimenting, observing and reflecting, steps that together form a cycle known as the discovery loop. It is important that the students learn to articulate and present their reflections.

The course was structured according to the principles of problem-based learning (Bjørke, 1996). A hyper system/multimedia system for the internet (Jonassen, 2000), referred to below as the hyper system, was developed for this course (Warholm, 2000; Albinussen, 2003), and we also used a traditional textbook. Some of the material, e.g. animations, existed only in the hyper system. The learning environment consisted of a web page with access to services such as the hyper system and an oracle service. Traditional lectures were usually recorded on video and synchronized with the corresponding material in the hyper system. The course also had an electronic workbook in which the students were expected to present their own and their group’s reflections. The compulsory exercise scheme was based on problem-based learning, and group work was a requirement. Material on the theory of group work was handed out to the students, and the student assistants introduced the working method (Andersen & Schwenche, 2001).

I wished to continue and extend these earlier trials, and I applied for (and was granted) funding to test “Alternative evaluation and exam formats”. What was new in this application compared with the earlier trials (Staupe, 2010) was that exercise work could count for 100% of the grade, and that the students could choose between three arrangements:

1. A 100% traditional exam;

2. 50% traditional exam – 50% exercise work;

3. A new arrangement allowed the exercise work to count for 100% of the final grade.

The background for this arrangement was that I had previously achieved good results, alongside traditional exams, by grading the exercises: the students clearly put more effort into graded exercises than into exercises marked only “approved/not approved”. All work was handed in electronically, but paper copies were also used in case of legal disputes. The handed-in exercise work filled 12 full 75 mm binders; with 129 students handing in exercise work, the binders took up almost 1 meter of my shelf space.

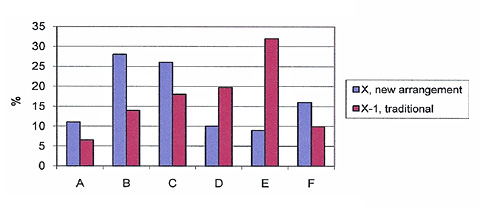

Figure 1 - Distribution of grades in years X-1 and X, in %. A is the best grade.

The experiment built on a teaching arrangement I had used before, as did its learning-theoretical basis, and an extensive set of exercises with suggested solutions was developed. The exercise scheme formed the core of the course. There were three exercise sets in total: exercise set 1 covered the first part of the syllabus and included 17 exercises; exercise set 2 covered the middle part and included over 20 exercises; and exercise set 3, the final set, consisted of more than 30 exercises. All in all, this was a very extensive exercise scheme. I chose exercises covering the entire syllabus rather than concentrating them around a project, which risks using only parts of the syllabus. I wanted to ensure that the students worked with the entire syllabus, especially those who had dropped the traditional exam. An alternative could be three or four smaller projects covering different parts of the syllabus, or a combination of exercises and project work.

I expected the exercise work to take up at least one-fourth of a semester’s study load, i.e. three to four weeks of full-time work. We expected the students to provide extensive, thoroughly written answers. They also had to search the internet and use sources beyond the course material, since an answer at the level of a traditional exam answer would not be comprehensive enough. The students were allowed to use information they had found as part of their answers, but they had to state clearly where they had found it. Many of the exercises required extensive use of the internet, e.g. the exercises on computer crime, for which information was found in current sources such as The Norwegian National Authority for Investigation and Prosecution of Economic and Environmental Crime (http://www.okokrim.no/) and research by the Confederation of Norwegian Business and Industry (http://www.nho.no/).

In addition to academic development, an important aim of the exercise scheme was to teach the students to search for information and to practice their writing skills. The funding application emphasized that students often have had little training in writing by the time they reach the postgraduate level.

I also emphasized writing because language is one of the most important tools in the learning process, both for exploring and trying out ideas and for organizing and expressing thoughts and opinions. In other words, writing serves as a process to learn through, not just as documentation of what one knows (Dysthe, Hertzberg & Hoel, 2000), and it teaches how knowledge is expressed.

There is not always room for such training in technical subjects, so I found it crucial to allow for it when possible.

Another important skill is evaluating and verifying work, both one’s own and other people’s. Exercise sets 2 and 3 therefore included a task in which the students evaluated their own work and a task in which they evaluated another student’s answer, which was handed out to them.

Based on a close reading of your own exercise set x, place your work on a scale from 1 to 10, with 10 as the highest score. Do this for each exercise and as a final result for the entire exercise set. Your comments should justify your placement on the scale and be written so that we can follow your evaluation.

Correspondingly, students were to write an evaluation (peer evaluation) of an exercise answer from an anonymous fellow student, which was handed out electronically. Because of technical problems, this was done for only one exercise set.
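The electronic hand-out of anonymous answers for peer evaluation can be illustrated with a small allocation routine. This is a minimal Python sketch, not the system used in the course: the student IDs are hypothetical, and the method (shuffle the students, then let each one review the answer of the next student in the shuffled cycle) is simply one way of guaranteeing that no one receives their own answer and that every answer is reviewed exactly once.

```python
import random


def assign_peer_reviews(student_ids, seed=None):
    """Map each reviewer to the student whose answer they will assess.

    Shuffling and then pairing each student with the next one in the
    shuffled cycle guarantees that nobody reviews their own answer and
    that every answer is reviewed exactly once (needs >= 2 students).
    """
    if len(student_ids) < 2:
        raise ValueError("peer review needs at least two students")
    order = list(student_ids)
    random.Random(seed).shuffle(order)
    # Reviewer at position i assesses the answer at position i + 1, wrapping around.
    return {order[i]: order[(i + 1) % len(order)] for i in range(len(order))}


# Example with hypothetical student IDs.
assignments = assign_peer_reviews(["s01", "s02", "s03", "s04"], seed=1)
for reviewer, author in sorted(assignments.items()):
    print(f"{reviewer} reviews the answer of {author}")
```

A real allocation would probably also avoid handing a student an answer from their own work group; that constraint is not handled in this sketch.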

In short, the students were supposed to learn:

1. Purely academic material;

2. How to search for information within the subject;

3. Writing training:

a) Learning process;

b) Documentation;

c) Expressing oneself, formulating answers;

4. Evaluation of one’s own work;

5. Evaluation of others’ work;

6. Editing/correcting according to suggested solutions/answers, etc.

The idea behind such evaluation was twofold: first, the students got training in self-evaluation; second, they worked on the material against the suggested solutions/answers instead of just putting it away “in a drawer”. Earlier, I had positive experiences with grading each exercise part, as well as with grading the students’ work as a whole after they had been allowed to revise it according to the suggested answers. The main point is to learn the subject, with a view to what one knows after finishing the course and in the future. However, the students seemed to be highly focused on “being rewarded” for their work in the form of grades, and some became even more focused on the grade than on the learning process, although the two should be two sides of the same coin. This focus is of course understandable, given that university students are mostly evaluated through grades. Two important examples are admission to a Master’s program and appointment to a PhD scholarship position.

In the last exercise set, the students were to carry out a self-evaluation/assessment (Jonassen, 2000) before submitting their answers. The main idea was to teach them to evaluate their own work without any model solution. I have done this myself on exams when I had time left over: I graded my answers and looked through them to see whether any parts needed more work. It was interesting to see whether the students would hand in answers that qualified for a C, or whether they placed their answers higher on the scale. As it turned out, the majority of the students clearly knew whether their answers qualified for an A, B or C when handing them in, which shows a realistic self-evaluation. They had to give arguments for their placement on the scale. Even so, some students thought that they qualified for an A, but the result was a C.

There were major, unexpected problems in the auditorium. They were technical: the main projection screen could not be used, and some of the lights were out. The lighting was fixed, but the main screen was never usable. The Norwegian University of Science and Technology waited for new parts for the entire semester, and it was impossible to switch rooms. As a result, we never had access to a video projector/computer. The students had to learn how to use the ICT learning environment on their own, which was not what I had planned. To some extent, the situation was saved by the fact that some earlier lectures were available on video in the hyper system, together with videos on how to use the ICT learning environment.

As was normal for all open IT courses, each student assistant was responsible for following up a large group of 20 students. Each large group was allotted one session per week, including two hours in a computer lab, where the students could seek advice and guidance from the course assistants. As long as there were seats available, the students decided themselves which group to follow, when to attend the computer lab sessions and with whom to collaborate. Registration and administration were handled on the course website.

Each large group was divided into work groups of one to three students, whose composition the students decided themselves. Most students chose to work in groups of two or three, but some preferred to work alone. Once formed, the work groups stayed fixed throughout the semester. Assignments had to be submitted individually; close collaboration was nevertheless allowed within the work group, except for the evaluation part. Each student had to report whom they had worked with, and any similarity between answers from students who had not reported working together would be considered cheating. The Norwegian University of Science and Technology exam regulations, with particular focus on what counts as cheating, were published on the website and repeated on several occasions. Each exercise set was submitted in three copies: two on paper, one of which was returned to the student with comments and one of which was kept for grading, and a third copy submitted electronically for archiving and for use in peer assessment. The exercise sets were published on the website, from where the students downloaded them; the suggested solutions were published there after the deadlines had passed.
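The cheating rule above (similar answers from students who did not declare each other as collaborators) can be sketched as a pairwise comparison. This is an illustrative Python sketch, not a tool used in the course; it assumes the answers are available as plain text keyed by hypothetical student IDs, uses the standard library’s `difflib.SequenceMatcher` ratio as the similarity measure, and the 0.8 threshold is an arbitrary assumption. Flagged pairs would still need manual review.

```python
from difflib import SequenceMatcher
from itertools import combinations


def flag_undeclared_similarity(answers, collaborators, threshold=0.8):
    """Return (student_a, student_b, ratio) for very similar answers from
    students who did not declare each other as collaborators.

    answers:       dict mapping student ID -> answer text
    collaborators: dict mapping student ID -> set of declared partners
    threshold:     similarity ratio (0..1) at or above which a pair is
                   flagged; 0.8 is an illustrative value, not a tuned one
    """
    flagged = []
    for a, b in combinations(sorted(answers), 2):
        declared = b in collaborators.get(a, set()) or a in collaborators.get(b, set())
        if declared:
            continue  # close collaboration within the work group was allowed
        ratio = SequenceMatcher(None, answers[a].lower(), answers[b].lower()).ratio()
        if ratio >= threshold:
            flagged.append((a, b, round(ratio, 2)))
    return flagged
```

`SequenceMatcher` compares character sequences and becomes slow on long texts, so for full exercise sets a faster similarity measure would be needed; the sketch only illustrates the rule.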

The course staff consisted of one exercise teacher, one course assistant for each large group, and myself as the subject teacher. The whole team met four times per semester. The exercise teacher and I met at least once a week, often more, and we also communicated online almost daily. The exercise teacher worked closely with the student assistants. Apart from the lectures, the students were to contact their course assistant first, both for counseling and guidance in the computer lab and for other enquiries. Most enquiries were dealt with online. If the course assistant could not solve a problem, the enquiry was passed on to the exercise teacher and, in some cases, to me as the subject teacher.

To run an exercise scheme of this magnitude, it is important to avoid an arrangement in which the subject teacher receives all enquiries; for me, that would have been impossible to handle. It was therefore important to create an arrangement in which I could follow each student via the course assistants. We made an evaluation form that the course assistants filled out for each student. In addition to administrative information, the form stated the student’s work group, the names of the group members, the quality of each exercise set, central points to pay attention to in each set, and the assistant’s general impression regarding deadlines etc., followed by a conclusion. For each exercise set, a detailed form was filled out with comments on the individual exercises, a discussion of the exercise set as a whole and a conclusion for the set.
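The per-student evaluation form can be pictured as a simple record. The following Python sketch only illustrates the fields listed above; the class and field names are hypothetical, and the `missing_sets` helper (assuming the course’s three exercise sets) shows how the subject teacher could see at a glance which sets an assistant has not yet reviewed.

```python
from dataclasses import dataclass, field


@dataclass
class ExerciseSetReview:
    """An assistant's detailed review of one exercise set for one student."""
    set_number: int
    quality: str                                                 # overall quality judgement
    task_comments: dict[int, str] = field(default_factory=dict)  # task number -> comment
    set_comments: str = ""                                       # discussion of the set as a whole
    conclusion: str = ""


@dataclass
class StudentEvaluationForm:
    """One form per student, filled out by the course assistant (hypothetical layout)."""
    student_id: str
    work_group: str
    group_members: list[str]
    exercise_sets: list[ExerciseSetReview] = field(default_factory=list)
    deadline_impression: str = ""                                # general impression, e.g. deadlines
    conclusion: str = ""

    def missing_sets(self, expected=(1, 2, 3)):
        """Return the exercise set numbers that have no review yet."""
        reviewed = {s.set_number for s in self.exercise_sets}
        return [n for n in expected if n not in reviewed]
```

For example, a form with only set 1 reviewed would report sets 2 and 3 as missing.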

By September 15th, the students had to choose one of the exam formats described above. Before then, they had received the first exercise set and could see what the exercises demanded.

The registration for the different exam formats was as follows:

| Exam format | Students (%) |
|---|---|
| 1: Exam counting 100% | 13% |
| 2: Exam 50% and exercise work 50% | 10% |
| 3: Exercise work counting 100% | 77% |

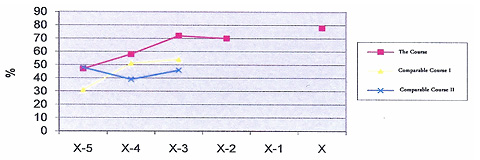

Figure 2 - Distribution of exam format

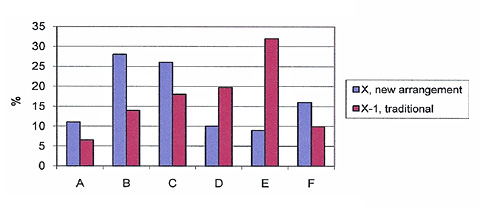

After finishing the course, the students were asked if they would have wished for a different exam format than the one they chose. The result was as follows:

Figure 3 - If one could choose again

Of those who would choose differently (16% of all students), the largest group (6%) would choose the format in which the exam counts for 100%. The main reason is probably that some found the exercise part too demanding. No one stated that they would switch from the 100% exam to 50/50% or 100% exercise work. I got the impression that many of those who chose the 100% exam did so because of their work situation. Overall, there was a somewhat greater wish for more exam rather than more exercise work, though the difference was small. Course completion reached a record high of almost 80% of the students initially registered for the course.

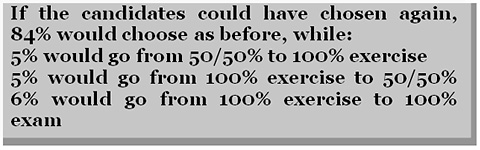

Figure 4 - Course completion: percentage of the students registered for the course who obtained a final grade. Figures for year X-1 are not available.

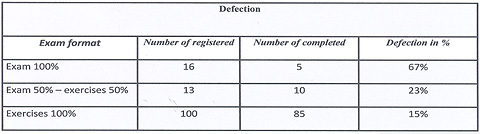

Earlier, I checked against other IT subjects to see whether there might have been factors other than the test arrangement that affected the result. Two typical IT subjects are presented as control subjects; basically, the IT subjects followed the control subjects. For the years X-1 and X-2, it has not been possible to track down the number of students registered for this course, but only those who registered for the exam. For year X, I have saved the number of students registered for the course myself, but the Norwegian University of Science and Technology does not have numbers for the other courses. As we can see, the completion percentage for the course was almost 80% for year X. The defection varied a lot between the three exam formats.

Figure 5 Defection within the different exam formats

The figure shows that the highest defection, 67%, was among those who chose the 100% exam arrangement. It may be that their courage failed and that they felt unprepared. The defection was much lower in the 50/50% group, at “only” 23%, despite these students taking the same exam as those with the 100% exam arrangement. It seems that working with exercises builds confidence. The students who chose the 100% exam had the same opportunity to hand in exercises as those in the 50/50% and 100% exercise groups, but our investigation indicated that very few did so. Those with 100% exercise work had a defection rate of only 15%.
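As a rough consistency check, the overall defection can be estimated as the registration-weighted average of the three group rates. This is a sketch under the assumption that the registration shares $s_k$ (13%, 10% and 77%) and the group defection rates $d_k$ refer to the same population of students, which the figures do not state explicitly:

$$
d_{\text{total}} \approx \sum_{k=1}^{3} s_k\, d_k = 0.13 \cdot 67\,\% + 0.10 \cdot 23\,\% + 0.77 \cdot 15\,\% = 8.71\,\% + 2.30\,\% + 11.55\,\% \approx 22.6\,\%
$$

This is of the same order as the overall defection of about 21% and the completion rate of almost 80% reported for year X; the small difference may come from rounding or from the percentages being based on slightly different groups of students.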

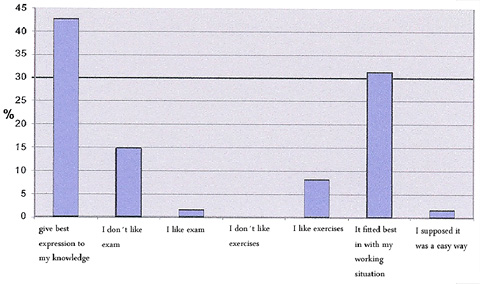

Students were asked why they chosen as they had done. The answers were:

Figure 6 [MS1] - Reason for choice of exam form

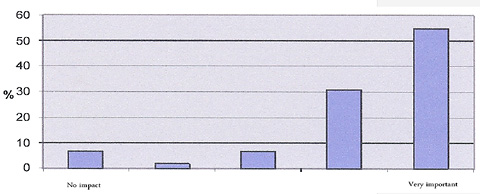

Students were asked about the importance of choosing an exam form. Their answers were:

Figure 7 - The importance to choice exam form

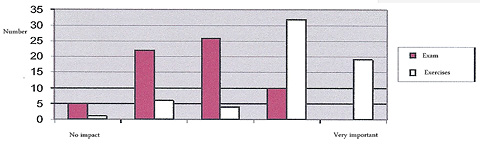

Students were asked how important the exam and the exercises were in giving expression to their knowledge. Their answers were:

Figure 8 - Number of students who thought that the exam or exercise work gave the best expression for their knowledge

Most students clearly felt that exercise work expressed their acquired knowledge much better than exams did. No one seems to have thought that exams expressed their knowledge very well, whereas almost 20 students, about one-third, stated that exercise work did.

One thing that required a lot of monitoring was preventing plagiarism (“copy theft”), or what we call “boiling”, i.e. using sources without references. We put a lot of effort into investigating potential sources, such as websites from which the students could find and copy material. Eventually, the course staff became very familiar with these sources and with the material that existed within the subject.

Unfortunately, some students were tempted to use sources without citing them, and some of them failed the course. We had to make sure that we had documented the relevant sources well enough to handle possible complaints, and perhaps we should have been even stricter. This should be discussed and reconsidered if something similar happens again. If “boiling” cannot be handled properly, such a teaching arrangement is not the way to go. I suggested to the department/faculty that we develop practical software tools for this purpose.
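As an illustration of the kind of software tool suggested here, the sketch below compares an answer with a collection of known sources using word n-gram “shingles” and Jaccard similarity, a common basic technique for detecting copied text. It is a hypothetical Python sketch, not an existing NTNU tool: `sources` is assumed to be a dictionary of texts the course staff have already collected, and the shingle length (5 words) and threshold (0.3) are illustrative assumptions.

```python
import re


def shingles(text, n=5):
    """Set of overlapping lower-cased word n-grams ("shingles") in a text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity |A & B| / |A | B| of two sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suspicious_sources(answer, sources, n=5, threshold=0.3):
    """Return (source name, score) for each known source whose shingles
    overlap the answer's by at least `threshold`, highest score first."""
    answer_shingles = shingles(answer, n)
    hits = []
    for name, text in sources.items():
        score = jaccard(answer_shingles, shingles(text, n))
        if score >= threshold:
            hits.append((name, round(score, 2)))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```

Because properly cited quotations also produce matches, and paraphrased text may not, hits would only be a starting point for manual checking against the student’s references.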

Evaluation/assessment (Jonassen, 2000) of one’s own and others’ exercises was probably the most unfamiliar part. The students said that they were tired after submitting an exercise set and did not wish to see it again. The self-assessment often seemed meaningless to them and was completed quickly, since they were sick and tired of the exercise after working on it “for soooooooo long”. One comment even claimed that this kind of teaching arrangement, especially the peer assessment, was downright unethical. But most students put effort into this part and understood its purpose: working with the material as part of an evaluation process and seeing oneself in relation to a fellow student, i.e. the “seventh intelligence”, self-knowledge (Goleman, 1995), knowing and understanding one’s own strengths and weaknesses. The students solved this part of the exercise quite differently. Here are a couple of examples; the scale ranges from 1 to 10, with 10 as the highest score. Self-assessment of one’s own exercise solutions:

12. The answer is given a grade of 9. Perhaps there should have been a clarification of which parts a process consists of, and what the relationship between the process and the operating system really is.

13. The answer is given a grade of 9. The race condition is described by the use of an example.

14. The answer is given a grade of 10. The answer is relatively long, but it provides a thorough explanation of the different ways of avoiding the race condition.

15. The answer is given a grade of 5. The explanation is a bit vague. A more thorough explanation of how semaphores really work should have been provided.

16. The answer is given a grade of 10. The answer is fully satisfactory.

17. The answer is given a grade of 7. The presentation of the batch system is partially wrong since I claim that batch systems do not apply to multi-programming. The truth is that the processes are allowed to run for a long time, but not until they are finished. The rest of the answer is quite similar to the solution.

It was very difficult to evaluate my own answer, since I easily spot many mistakes in my own material. I had set a goal before I started working on the exercise, and I have not quite reached it. However, when I compare my answer with the answer that was handed out, mine is clearly much better in terms of language and context, even though it emphasizes the big picture rather than details. The total grade for my answer is 9 (median), while the arithmetic mean is 8.8.
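The student reports both a median and an arithmetic mean. Only tasks 12-17 are quoted above, so the student’s overall figures (median 9, mean 8.8 for tasks 1-17) cannot be reproduced here, but the Python sketch below, using the standard `statistics` module on the six quoted scores, shows why the two measures differ: a single low score (5 for task 15) pulls the mean down, while the median is unaffected.

```python
from statistics import mean, median

# Self-assessed scores (1-10) for tasks 12-17, as quoted above.
# Tasks 1-11 are not reproduced in the article, so these numbers
# cannot reproduce the student's overall median 9 and mean 8.8.
scores = {12: 9, 13: 9, 14: 10, 15: 5, 16: 10, 17: 7}

values = list(scores.values())
print("median:", median(values))        # middle of the sorted scores: unaffected by the one low score
print("mean:", round(mean(values), 2))  # pulled down by the single score of 5
```

For the six quoted scores, this prints a median of 9.0 and a mean of 8.33.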

EXERCISE 1

Grade: 9

Reason: I have chosen 100% exercise work and no exam. At first I was a bit uncertain whether I would learn less than if I had studied for an exam. However, I have proven the opposite. Since the exercises count for the final grade, you obviously want to do your best. I have put a lot of effort into this exercise, which in turn has led to new knowledge for me. This applies not only to the things asked for in the exercises, but also to the knowledge one needs to answer the questions as well as possible. I have provided such thorough answers to each question that you just have to learn something from it. Both my curiosity and my fascination with these topics have made me really eager to learn. I started early on the exercise in order to stay in control and have enough time to finish early. I have not had the chance to collaborate with anyone; perhaps it would have been a good idea to discuss some of the issues with fellow students to obtain even better answers. So this is perhaps a weak side of my answer.

Nor have I received help from the student assistants; this is something I need to become better at, since it would probably improve my answers. The reason for not giving myself the lowest grades on the exercise is that, by following lectures and reading a lot, I have worked hard on each and every question, and therefore I do not deserve the lowest grades. All in all, I have learned a lot about a lot, so I think this is a very interesting way of working.

This is the end of the self-assessment for the first exercise set (all exercises from 1 to 17 were evaluated). This student is obviously goal-oriented, and it comes as no surprise that the student ended up with an A in the course.

Example of a peer assessment, exercise set 1, tasks 12-17:

Task 12, Grade: 6 - Here I would have included more and answered a bit differently, but the answer is ok.

Task 13, Grade: 7 - Here I think the answer was a bit short; it should have included more.

Task 14, Grade: 9 - Good answer, providing explanation of what happens.

Task 15, Grade: 8 - Good answer, here the most important things are included.

Task 16, Grade: 7 - Short and quite good answer to the question, could perhaps have elaborated a bit more.

Task 17, Grade: 10 - Very good and clear, nice descriptions.

Exercise 1, Grade: 7 - This grade is based on my view that some answers should have been written more thoroughly. I also miss examples in some of the answers, which could have been very informative. The answers are correct, but I think some of them should have been longer, so I ended up giving this grade.

Many answers fell short because some students answered as they would on an exam. Even though the answers were correct, they lacked depth, had not been thoroughly worked through and contained no examples. I think the peer assessment above shows this quite clearly.

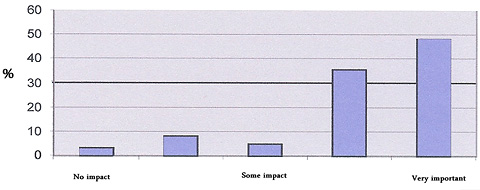

Figure 9 - Using exercise work to build one’s own learning

Students were asked how important exercise work is for their learning. Most students (87%) had chosen to work with exercises. Almost 50% think that “using exercise work contributes a lot to one’s own learning”, and 35% are close to this view, so almost 85% see exercise work as very important to their learning. Only 2-3% stated that exercise work is not important, and if we include those bordering on this view, 11% see exercises as less important. Five percent are in a grey area.

Previous research (Staupe, 2010) has shown that students who do not choose exercises get an exam grade approximately one grade point weaker, on the old grading scale (where 1 is the best and 6 the weakest grade), than those who choose an arrangement with graded exercises.

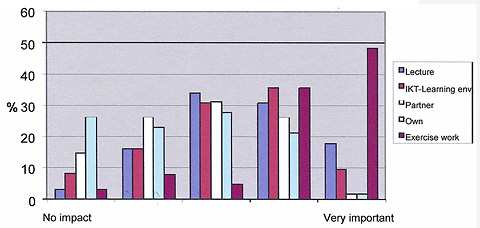

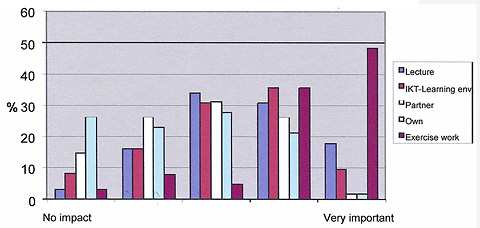

Figure 10 - Building one’s own learning, an overview (“IKT” in the figure is the Norwegian abbreviation for ICT)

If we average the students’ ratings of how much each element contributed to building their own learning and convert the averages into letter grades, we get:

- Exercises; a solid A

- Lectures; a solid B

- ICT learning environment; a solid B

- Peer-assessment; C

- Self/own-assessment; C
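The article does not state the response scale behind these figures or how the averages were converted into letters. One possible conversion is sketched below in Python, under explicitly hypothetical assumptions: a five-point scale where 5 means “contributes a lot”, a weighted average over the number of students choosing each point, and a linear mapping from 5 to A down to 1 to E, rounded half up to the nearest point.

```python
import math

# Hypothetical mapping: scale point 1 -> E, 2 -> D, 3 -> C, 4 -> B, 5 -> A.
LETTERS = "EDCBA"


def average_rating(counts):
    """Weighted average of Likert responses.

    counts: dict mapping scale point (1-5, 5 = contributes a lot; the
            scale itself is an assumption) to the number of students.
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    return sum(point * n for point, n in counts.items()) / total


def to_letter(avg):
    """Round the average half up to the nearest scale point, then map it to a letter."""
    point = min(5, max(1, math.floor(avg + 0.5)))
    return LETTERS[point - 1]
```

Under this mapping, a “solid A” corresponds to an average close to 5 and a C to an average around 3; a different scale or different cut-offs would give different letters.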

However, we are not aiming for an “either/or”, but for a learning environment that combines several different teaching arrangements. The ideal arrangement would let the students choose within a given selection; Figure 7, for example, shows how important the students consider it to be allowed to choose the exam/evaluation format.

Figure 11 - How many lectures the students wanted

The students were asked how many lectures they preferred, ranging from none to the number of lectures given during the course, assuming that they had access to a good learning environment. More than 70% preferred the number of lectures given during the course. The rest of the answers fall into groups 2 and 3, which means that barely 30% would wish to reduce the number of lectures to two or three per semester. It might be possible to meet both wishes: one lecture at the beginning, one in the middle of the semester and one summarizing lecture at the end, with “regular” lectures in between. No one ticked the box saying that they did not want any lectures. It is therefore hard to see any reduction in the subject teacher’s workload, or any economic benefit, despite the good ICT learning environment. Nevertheless, it was decided to alter the arrangement to some degree in order to meet more wishes and needs and to offer a better learning environment in general. Experience shows that students who have not been able to follow lectures in the auditorium have done well; this also applies to students following video lectures on the Internet.

The best option would be to give feedback in the form of grades during the year; however, this could not be combined with our arrangement with an external examiner, since bringing in the external examiner several times during the semester was unrealistic. With “approved/not approved”, doubtful cases could be referred to the subject teacher, and the rest could be handled by the course assistants. However, some students complained about not getting a grade for each evaluation. It is understandable that knowing one’s level at all times would be motivating, but it would also require considerable commitment from the subject teacher throughout the semester. Perhaps this would be feasible using multiple-choice tests/tasks.

Even with skilled course assistants, I cannot see how grades could be set without involving the subject teacher. Grading throughout the academic year would also make it difficult for the subject teacher to take part in activities outside the course. One is expected to do research in collaboration across borders, and to participate in, and preferably lecture at, seminars and courses here and there. With an additional responsibility for grading, with set deadlines at several points during the semester, there might be too little time for activities outside the course.

Grading also brings extra work, which was manageable thanks to a well-organized arrangement. Since this was the first time, it took a while before the examination results were ready, and some students showed little understanding for this. With more experience, we could probably have completed the grading two weeks sooner. Nevertheless, the results were ready by the set deadline. As a basis for grading, the external examiner and I, as the subject teacher, had access to all the comments given to the students when their answers were returned, which let us go through all exercise sets in detail, exercise by exercise, and make an overall assessment. This material was invaluable when going through the exercise sets.

The students could choose between three evaluation formats: a traditional exam, 50/50% traditional exam/exercise work, or 100% exercise work. Experiences from the course suggest that the exercise conditions and the exercise arrangement are more decisive for the quality of the study results than the lectures; for example, defection dropped from over 50% before the trial of alternative exam/evaluation formats to 21% after. The defection rates for the three groups were 67% (100% exam), 23% (50/50%) and only 15% (100% exercise work).

We also notice that good exercise conditions, in terms of both access to computer equipment and exercise assistance, seem to be most important for obtaining good results.

The majority of the students expressed that an arrangement requiring an effort to obtain a respectable grade, rather than only “passed/failed” on the exercise work, yields better professional results and knowledge than a traditional arrangement with a 100% exam. The two main reasons for choosing an exam form were that it gave the best expression of their knowledge (43%) and that it fit best with their work situation (31%). Some (15%) answered that they disliked the traditional exam form. Only 2% answered that they liked the exam.

The majority of the students, 85%, said that it is important or very important to be able to choose the exam form; only 7% said it made no difference. The students were also asked how well the exam and the exercises expressed their knowledge. They considered exams a poor expression of their knowledge, whereas they considered exercise work a good one.

If we average what the students feel contributed to building their own learning and convert it into letter grades, we get: exercises, a solid A; lectures, a solid B; ICT learning environment, a solid B; peer assessment, a C; and self-assessment, a C.

One of the things that required the most monitoring was preventing plagiarism (“copy theft”), or what we call “boiling”, i.e. using sources without references. If “boiling” cannot be handled properly, evaluating and grading exercises becomes a very big problem. We need to develop powerful software for this purpose.

Albinussen, T. (2003). Navigering i internett og hypermedia. Master’s thesis, NTNU/IDI.

Andersen, S. A., & Schwenche, E. (2001). Prosjektarbeid: En veiledning for studenter. Bekkestua: NKI forlaget.

Bjørke, G. (1996). Problembasert læring – ein praksisnær studiemodell. Oslo: Tano Aschehoug.

Dysthe, O., Hertzberg, F., & Hoel Løkensgard, T. (2000). Skrive for å lære: Skriving i høyere utdanning. Oslo: Abstrakt forlag.

Goleman, D. (1995). Emotional Intelligence. New York: Bantam Books.

Jonassen, D. H. (2000). Computers as Mindtools for Schools: Engaging Critical Thinking. Upper Saddle River, NJ: Merrill Prentice Hall.

Larsen, S. (1995). Man kan ikke lære nogen noget – mod et nyt læringsbegreb. In A. Christensen (Ed.), Individualisering og demokratisering af læreprocesser – muligheder og betingelser. København: SEL.

Lie, S., Thowsen, I., & Dahle, G. (Eds.) (2000). Fagskriving som dialog. Oslo: Gyldendal Akademisk.

Staupe, A. (2010). Experiences from Blended Learning, Net-based Learning and Mind Tools. Seminar.net (www.seminar.net). http://seminar.net/images/stories/vol6-issue3/Staupe_Experiences_from_Blended_Learning.pdf (retrieved 20.6.2011)

Warholm, H.-O. (2000). Hypermedia i undervisningen. Master’s thesis, NTNU/IDI.
