Senior Lecturer

Faculty of Agriculture and Information Technology

Nord-Trøndelag University College

E-mail: hugo.nordseth@hint.no

Assistant professor

Faculty of Agriculture and Information Technology

Nord-Trøndelag University College

E-mail: sonja.ekker@hint.no

Assistant professor

Faculty of Agriculture and Information Technology

Nord-Trøndelag University College

E-mail: robin.munkvold@hint.no

Our exploration of peer assessment as formative feedback in ITL111 Digital Competence for Teachers (15 ECTS) and GEO102 Physical Geography (15 ECTS) is supported by tools within the LMS, sets of intended learning outcomes, rubrics and the Six Thinking Hats. The overall effect is improved quality of the student assignments and deeper learning. The best results were registered with the use of rubrics, where the students were presented with clearly defined criteria for expected performance on a sample of themes within the course. In order to perform the peer review, the students had to acquire basic knowledge of the various themes. In addition, seeing how others solved the assignment gave the students reflections on the themes that could improve their own final portfolios.

Keywords: Peer Assessment, Intended Learning Outcomes, Learning Management System, Rubrics, Portfolio, Six Thinking Hats, Deeper Learning

Peer assessment is relevant for teaching and learning in different contexts, specifically in connection with the use of portfolios and guidance for students' preliminary work on a portfolio. A search for the keyword peer assessment on Norwegian websites shows a lot of activity in the period 2004-2005, but not much afterwards. Peer assessment was developed as a basic didactic tool for the health care study program at Oslo University College (Havnes, 2005). Walker (2004) has published a paper on learning to give and receive relevant critique using peer assessment. Peer assessment is used in study programs at several university colleges and universities in Norway. A report on international trends in assessment (Dysthe and Engelsen, 2009) concludes that self-assessment and peer assessment will replace assessment by the teacher or the external grader.

The goal of the project ASSESS-2010 is to investigate how to make cost-efficient assessments in the learning process for students in courses with many students. Many courses still rely on traditional teaching with limited support for the learning process before the final exam. In this context, we wish to gather empirical evidence on adequate tools for learning activities in an e-learning environment using principles for deeper learning. Carmean and Haefner (2002) synthesized various theories on learning into five core deeper learning principles: social, active, contextual, engaging and student-owned. Deeper learning occurs through these five principles, but they do not have to be present all the time nor all at once (ibid).

Our experience from this project is based on peer assessment in two pilot courses at Nord-Trøndelag University College. The feedback on student reports before the exam portfolio delivery was mainly based on the peer assessment method. We introduced several tools to improve the quality of the peer assessment process. First, the peer assessment was connected to intended learning outcomes (Biggs and Tang, 2007). Second, we applied a set of criteria that the students had to follow when giving feedback to each other, using a rubric tool based on ideas from Fjørtoft (2009). In addition, we introduced the Six Thinking Hats as a tool for giving relevant and constructive feedback (De Bono, 2010).

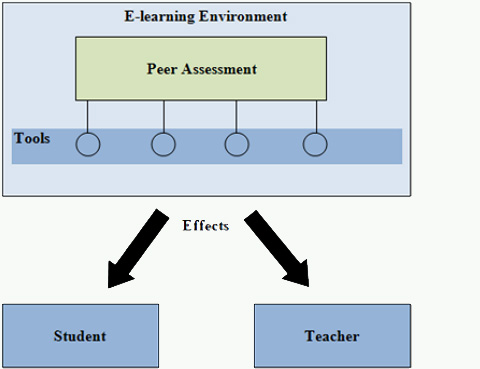

In this paper we present our experiences with peer assessment in an e-learning environment. The research question is defined as follows: How can tools like learning objectives, rubrics, Six Thinking Hats and discussion forums within the LMS promote better quality for the student assignments and make the students achieve deeper learning? Our research model is presented in illustration one.

Figure 1: The research model.

The pilot courses use web-based learning for a mixture of on-campus and distance students. The teaching activities are mainly web-based, with both lessons and feedback on student assignments. In addition, the distance students have an on-campus gathering with full-time teaching for three days at the beginning of the semester, and a second gathering midway through the semester. The full-time students on campus attend a regular two-hour lecture each week. The final exam is a portfolio consisting of the best of the previously submitted assignments. Both pilot courses have on average 60 students. Some of the student assignments are organized as group activities, which gives the students the opportunity to build relations with other students participating in the courses.

The main reason for choosing ITL111 Digital Competence for Teachers and GEO102 Physical Geography as pilot courses was that they have more students than the other courses at our university college. Another reason was that the courses are delivered with a wide use of e-learning environments for teaching and learning purposes.

Our research process is based on the use of selected tools for peer assessment. Earlier research discusses the terms peer assessment and peer feedback. Liu and Carless (2006) explained the difference between the two: peer feedback is primarily about rich, detailed comments without formal grades, whereas peer assessment denotes grading. In our cases the task was to give feedback on three other student assignments, and we also provided tools for measuring the student assignments by giving a mark on each requirement in the rubrics.

Our university college uses Fronter as its learning management system (LMS). The challenge for our research project is that Fronter lacks specific tools for peer assessment, so we had to explore alternative ways of managing peer assessment in this environment. We prepared a specific archive or "room" within Fronter for each group of 3-5 students. The students had write access to this archive, and we recommended that they establish a discussion forum as a method for feedback in the peer assessment process.
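Dividing a class into review groups of 3-5 students can be done by hand, but a small script may help for classes of around 60. The following is only a minimal sketch of one possible approach, not the procedure used in the project: the function name `make_review_groups`, the target size of four and the random shuffling are all illustrative assumptions.

```python
import random


def make_review_groups(students, target_size=4, min_size=3, max_size=5, seed=None):
    """Split students into groups whose sizes all lie in [min_size, max_size].

    Hypothetical helper: the name, defaults and shuffling are assumptions
    made for illustration, not part of Fronter or of the project itself.
    """
    if not min_size <= target_size <= max_size:
        raise ValueError("target_size must lie between min_size and max_size")
    n = len(students)
    if n < min_size:
        raise ValueError("too few students to form a single group")

    shuffled = list(students)
    random.Random(seed).shuffle(shuffled)

    # Start from the number of groups closest to the target size,
    # then adjust until every group can respect the size limits.
    n_groups = max(1, round(n / target_size))
    while n_groups * max_size < n:
        n_groups += 1
    while n_groups * min_size > n:
        n_groups -= 1
    if n_groups < 1 or n_groups * max_size < n:
        raise ValueError("no grouping satisfies the size limits")

    # Distribute students as evenly as possible across the groups.
    base, extra = divmod(n, n_groups)
    groups, start = [], 0
    for i in range(n_groups):
        size = base + (1 if i < extra else 0)
        groups.append(shuffled[start:start + size])
        start += size
    return groups


# Example with 60 placeholder student identifiers.
groups = make_review_groups([f"student{i}" for i in range(60)], seed=1)
```

Each resulting group would then correspond to one archive or "room" in the LMS.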

In the last of four student assignments in the course ITL111 Digital Competence for Teachers (15 ECTS), the task was to produce a set of multiple-choice questions for the students' own class in primary or secondary school. During the peer assessment process, the students wrote a report in which they presented and explained the introduction to the multiple-choice test, six of the questions from the test, and the marking policy. The report was posted in the dedicated archive in Fronter to which a group of 3-5 students had access. Each student reviewed the reports of the other students in the group using the following tools for peer assessment: the intended learning outcomes, rubrics and feedback based on the Six Thinking Hats. The second part of the student task was to write an individual reflection report in which the students presented their own feedback and reflections on the peer assessment process. The students were to consider and reflect on the learning effects of using the method of peer assessment. The instructions for the individual reflection report were to present:

Your evaluation and feedback to three of the other student assignments.

Your reflection on the use of intended learning outcomes and the rubrics in the peer assessment process, considered from both a student and a teacher perspective. What are the possibilities and what are the limitations of these tools?

These individual reports of feedback and reflections were delivered in a portfolio archive for student reports and teacher feedback in Fronter. Based on the feedback from fellow students, each of the students could improve their preliminary portfolios before delivering their final portfolio. The final portfolio was evaluated by an external grader of the course in addition to the teacher.

In the course GEO102 Physical Geography (15 ECTS) there are three mandatory student assignments. The instructor gave each student feedback on two of these assignments, and peer assessment was used for the feedback on the third. The class was divided into groups of about four students. Each student read the papers of the other group members and gave feedback on their work. The tools for the student feedback were the relevant intended learning outcomes of the course, a set of criteria presented in a rubric with guidelines for different marks, and a simplified version of the Six Thinking Hats. The rubric with guidelines for different marks was presented because the students were expected to set a preliminary mark on the other students' assignments. In terms of "the thinking hats", the students were instructed to give feedback on some positive aspects and some negative aspects, and to provide some suggestions for improving the fellow student's assignment. The completed evaluation was to be about half an A4 page. In a reflection report submitted later in the course, the students were asked to answer some questions:

Did the assessment tools help in the process of evaluating the other students' assignments?

Were you inspired to write a more thorough paper knowing that other students would be reviewing the work in addition to the instructor? Do you think the feedback given by the other students in your group helped you in the process of improving your portfolio? Did the process of peer reviewing assignments make you learn the subject more effectively? Did you get helpful tips by reading the other students' assignments?

The research can be categorized as a case study of two different applications of the peer assessment method. Case studies are conducted in situations where the phenomena are studied in their natural context. In this situation, the research is based on several data sources to ensure the most thorough and detailed investigation (Askheim and Grenness, 2008). Our empirical data are based on the students' reflection reports and activities in the Fronter archives of the two courses.

Our approach has two primary theoretical aspects. First, our case study of peer assessment is based on support from relevant tools in the e-learning environment, intended learning outcomes, rubrics and the Six Thinking Hats for evaluation. What is the adequate use of these tools for peer assessment, and what is useful in our context? Second, we want to investigate the perceived learning effects of the peer assessment process for our cases. What are the perceived effects of using these tools in peer assessment for the teacher and the learners?

An important goal of the project ASSESS-2010 was to gain experience with alternative learning theories that support a portfolio teaching style. The method developed is based on the idea of "constructive alignment" (Biggs and Tang, 2007), an educational framework for seeing the connections between what students are expected to learn (intended learning outcomes), the evaluation and the learning process. Process evaluation is the part of the learning process that leads students towards their learning goals. It is a formative assessment of students during the learning process and provides feedback to students about how they are performing in relation to the learning goals. Feedback is thought to contribute to increased motivation for learning in the course (ASSESS-2010).

The student assignment on multiple-choice and feedback - by the process of using peer-assessment - had three learning outcomes:

After working with the lesson on creating multiple-choice questions you should be able to:

make a criteria-based tool for assessment of a given theme/goal

give constructive feedback to fellow students

evaluate suitability of the assessment method and student assessment

The assignment used for the peer review in Physical Geography was on the topic of quaternary deposits and landscape formation. The learning outcomes for this part of the curriculum were defined as follows:

describe the quaternary deposits in Norway

describe how they are formed, where they are deposited and the landscape formation

The website Assessment is for Learning (2010) has a glossary defining the concept rubrics:

A set of graded criteria, often in the form of a grid, which describes the essential quality indicators of a piece of work or product, in order for it to be accurately assessed. Rubrics provide learners with learning intentions and success criteria, and can also be useful for encouraging interactive dialogue about quality. For example, they can demonstrate the key features at a variety of levels, which indicate to learners what would be a poor, an average and a very good piece of work. They can be used before, during and after activities, and can be helpful in providing learners with quality feedback as well as enabling opportunities for self and peer assessment.

Fjørtoft (2009) has presented a number of rubrics to improve assessment practices for many subjects in Norwegian schools. Based on his ideas we made a specific rubric for defining learning outcomes, by placing marks from A (best) to E (poor) for each requirement and assessment goal. For assessment of the assignment on multiple-choice questions, six requirements were presented in the curriculum (Table 1). We introduced requirements for making a good introduction, a feedback strategy, and skills for making multiple-choice questions.

The students used Fronter or It's learning for making their multiple-choice tests. The tools for making multiple-choice questions offer several question types, like radio bar, multiple check-boxes, yes/no-arrow, drop-down list, short text, long text, click-on-screen, and connect from two sets of statements. There was also a requirement to use stimuli in the questions, like a picture, a web address, a map, an animation, a figure, or an illustration. We also introduced requirements connected to a new version of Bloom's taxonomy for making questions that cover different aspects of learning: remembering, understanding, applying, analyzing, evaluating, and creating (Overbaugh and Schultz, 2010).

| Requirement/ | A | B | C | D | E |
| --- | --- | --- | --- | --- | --- |
| The introduction | All claims | All claims | Some requirements | Short intro | Thin intro |
| Sort of questions | 4 types | 3 types | 2 types | Single type | Single type |
| The use of stimuli | Different types | Different types | Minimal | Only questions | Only questions |
| Bloom's taxonomy | > 3 + | 3 + | 2 + based | 1 + justified | Unfounded |
| Scores - feedback | Differentiated | Differentiated | Single | Single | Simple and |
| Total valuation | Advanced and superb fit the target audience | Very good and fit the target audience | Good and adapted to the target audience | Easily | Simple minimum solution |

Table 1: Rubrics with requirements for the assessment on the multiple-choice assignment.

Table 2 shows the set of requirements for the A - E marks to be given to the students' work in Physical Geography. The table gave the students a common platform they could use to set a preliminary mark on a fellow student's work. The table also made the students conscious of what is needed in order to get a good mark on a student assignment.

| | A | B | C | D | E |
| --- | --- | --- | --- | --- | --- |
| References in text | OK | OK | Mostly OK | Missing something | Deficient |
| Reference list | OK | OK | Mostly OK | Missing something | Deficient |
| Concepts | Many correctly | Many correctly | Taken with the most common | Must have some | Little |
| Content | Master the curriculum + additional info as well | Master the curriculum | Key points | Missing a part, some errors | Lacking a lot |

Table 2: Rubrics with requirements for preliminary marks given to student work in Physical Geography.

"Six Thinking Hats" is a technique that helps us look at project ideas from a number of different perspectives (De Bono, 2010). It is also defined as a thinking tool for group discussion and individual thinking. It helps us make better decisions by pushing us to move outside our habitual ways of thinking. The technique is mainly developed for collaboration groups, in order to make the participants think more easily together. This way of organizing feedback within collaboration groups has a psychological effect, because the participants are more aware of the roles that they are playing, and therefore will not be afraid to give both positive and negative feedback to the other participants’ work. De Bono defines the six thinking hats like this:

White hat: Calls for information known or needed: This means that the participants give feedback on their understanding of the subject and input on known and needed information regarding the theme.

Red hat: Signifies feelings, hunches and intuition: This means that the participants give feedback on their feelings, hunches and intuition towards the subject being presented.

Black hat: Judgment -- the devil's advocate or why something may not work: This means that the participants give feedback on aspects of the subject that they think will fail. In general: looking for problems and mismatches.

Yellow hat: Symbolizes brightness and optimism: This means that the participants give feedback on what they like regarding the given theme and what positive (side) effects they assume the idea will bring.

Green hat: Focuses on creativity: the possibilities, alternatives and new ideas: This means that the participants give feedback on what they think the possibilities regarding the subject are, and on new ideas they have that might take the project further.

Blue hat: Is used to manage the thinking process: This means that the Project Owner tries to summarize the process thus far and gives the participants the opportunity to give feedback on the big picture of the theme and on the content of what has been discussed.

Introducing peer assessment in a course has effects both on the learner and the teacher. The conceptual rationale for peer assessment and peer feedback is that it enables the students to take an active role in the management of their own learning (Liu and Carless, 2006). The main argument here is that the learners monitor their work using internal and external feedback as catalysts. Falchikov (2001) argues that peer feedback enhances student learning as students are actively engaged in articulating the evolving understanding of the subject matter. Liu and Carless (2006) give some other relevant arguments. First, the learner would receive a greater amount of feedback from peers, and it happens quicker than when the feedback comes from the teacher. Second, the learning is to be extended from the private and individual domain to a more public domain. We learn more and deeper through expressing and articulating to others what we know or understand.

Brown et al. (1997) argue that resistance by students to informal peer feedback is rare. The resistance to formal peer assessment for summative purposes is frequently based on three reasons: dislike of judging peers in ways that 'count', a distrust of the process, and the time involved. In our cases we therefore focused on informal peer feedback and applied the tools in the assessment of a preliminary portfolio instead of the final portfolio.

Liu and Carless (2006) also mention some possible reasons for resistance to peer assessment:

Reliability. This is connected to grading and marking. In our project the students only gave marks on the preliminary student assignment.

Perceived expertise. Academics are expected to have far more expertise than students. Some students may feel that their classmates are not qualified to provide insightful feedback, whilst others may find it easier emotionally to accept feedback from peers.

Power relations. To assess is to have power over a person, and sharing the assessment with students leads to sharing of the teacher's power. In our project most of the students are teachers or in a teacher study program, so it should be important for them to practice giving feedback and assessment.

Time. Peer assessment demands more time spent on tasks like thinking, analyzing, comparing, and communicating.

From the teacher perspective, earlier research showed that using self- and peer marking on a mid-term portfolio both saved staff time and enabled students to receive feedback more promptly (Boud and Holmes, 1995). With increasing resource constraints and decreasing capacity of academics to provide sufficient feedback, peer feedback can be a central part of the learning process (Liu and Carless, 2006). The teacher perspective also deals with:

Reliability. Are students making correct marks during the peer process? How can we assure that the mark and the feedback are relevant?

Perceived expertise. A key solution is to give the students relevant tools that they can apply in the peer assessment process.

Time. When introducing peer assessment the teacher spends more time scheduling and planning rather than teaching and coaching.

When planning the peer assessment assignments, we had to deal with some challenges concerning the aspects of supporting the students in the learning process and to give support in order to avoid problems with resistance against the assignment. Table 3 presents an overview of the main aspects. It shows the challenges a teacher encounters in the project of using peer assessment. These questions also give the structure for reporting the results and the empirical findings in this paper.

| Aspect | Teacher's challenge | Learners' feedback or results |
| --- | --- | --- |
| Reliability | How do we manage reliability? Is there any resistance against giving marks? | Empirical feedback on reliability? |
| Perceived expertise | How do we support insecure students with lack of competence and work progress? Are the tools of the rubrics, the intended learning outcome and the Six Thinking Hats useful for the student assignment? | Empirical feedback on perceived expertise? |
| Time spent on the peer assessment process | Do teachers spend more or less time administering the course with a peer assessment assignment and process? | Empirical feedback on time consumed in the peer assessment process |
| Quality of the student reports | Do the students get deeper learning using the peer assessment process? | Compare the quality of students' assignments |
| Support from the e-learning environment | Does the e-learning environment have relevant tools for the peer assessment process? | Empirical feedback on the support from the e-learning environment |

Table 3: Challenges on planning and duration of peer assessment in our projects.

In this section we present ways of dealing with the challenges presented in Table 3, and some empirical findings from the students' reports, e-mails and results from the learning process of using peer assessment.

We set two main goals for the quality control of the feedback process on the student report from the multiple-choice test. First, we had to verify that every student received feedback from at least two other students. Second, we had to verify that each student received feedback with proposals for improvements. The students documented their feedback process in their individual reflection reports.

Through this quality control we found that two students did not receive feedback at all, and we had to provide our own feedback to these students. This teacher feedback was given in the forum for peer assessment. In the end, every student received relevant proposals for improvements.
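The two checks described above can be expressed as a short script. This is a minimal sketch under stated assumptions, not the procedure we actually followed (the checks were made from the reflection reports and the Fronter forums): the function name `check_feedback_coverage`, the record layout `(reviewer, author, proposal)` and the student names are hypothetical.

```python
from collections import defaultdict


def check_feedback_coverage(students, feedback, min_reviewers=2):
    """Return students who lack reviewers or proposals for improvement.

    feedback is an iterable of (reviewer, author, proposal) tuples, where
    proposal is the free-text suggestion (empty if none was given).
    The record layout is an assumption made for this illustration.
    """
    reviewers = defaultdict(set)
    has_proposal = defaultdict(bool)
    for reviewer, author, proposal in feedback:
        if reviewer == author:
            continue  # self-review does not count as peer feedback
        reviewers[author].add(reviewer)
        if proposal.strip():
            has_proposal[author] = True

    gaps = {}
    for student in students:
        n_reviewers = len(reviewers.get(student, ()))
        got_proposal = has_proposal.get(student, False)
        if n_reviewers < min_reviewers or not got_proposal:
            gaps[student] = (n_reviewers, got_proposal)
    return gaps


# Hypothetical example data: four students in one group.
students = ["Anna", "Bjorn", "Cecilie", "David"]
feedback = [
    ("Bjorn", "Anna", "Add the maximum score to the introduction."),
    ("Cecilie", "Anna", ""),
    ("Anna", "Bjorn", "Use more question types."),
    ("Anna", "Cecilie", "Link the picture to the question."),
    ("Bjorn", "Cecilie", ""),
]
gaps = check_feedback_coverage(students, feedback)
# gaps == {"Bjorn": (1, True), "David": (0, False)}
```

Students listed in `gaps` are those for whom the teacher would need to step in with additional feedback.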

Two of the nine groups decided not to give marks in the peer assessment of the student report. The students here are all either practicing teachers or student teachers, and should be skilled in giving marks. This observation indicates some resistance against giving marks in peer assessment.

The rubrics were an important incentive for the students when they developed a multiple-choice test, and also for the later work on the report on the peer assessment process. As one of the students said: "Knowing that other students will have access to my report gives me motivation to perform better."

The rubrics were first used when the students made the multiple-choice test. It was important to make questions and a test that fitted the requirements for a good mark. The next use of the rubrics was when the students wrote their report on the multiple-choice test and the peer assessment process. They wrote the report, chose questions and argued for their decisions in a way that would fit the requirements for the later peer assessment. To write their own report, they therefore had to learn a lot about the specific requirements.

During the peer assessment process, they had to evaluate and give feedback on the other student reports. This process required the students to have good knowledge of the reports' topic so that they could give advice for improvements to the other students. As the students said:

"A great advantage is that one has to dig more deeply into the theme because one has to do peer feedback", "Assessing other students' work makes me better prepared for assessing my own report" and "It has made me more aware regarding the demands for assessing the assignment."

It's learning and Fronter have different options and solutions for making multiple-choice tests. Our students used either It's learning or Fronter for their work on this topic. Because the peer assessment involved users of different LMSs, the students got ideas and new perspectives from each other on how to make multiple-choice tests.

The feedback process also includes tools for how to give adequate feedback. We gave no such tools in the introduction, and some of the students made their own rubrics for giving feedback based on the given requirements. Table 4 shows an example of how to give feedback.

Based on the feedback given in the peer assessment process, the students were allowed to improve their own reports before posting them to the final portfolio. As part of this process they also had to identify the most adequate feedback. For example, when they received feedback from three peers with incompatible advice for improvements, the students had to decide which advice was the most adequate for their own assignment and report. This is illustrated by the following statement from one of the participants:

The advantage of giving peer feedback in an open forum is that one receives a variety of feedback and in many cases different students provide different feedback even though they have the same criteria/rubrics to follow.

| Requirements for feedback | Marks and evaluations based on the requirement | Suggestions for improvements |
| --- | --- | --- |
| 1: Introduction to the test | Mark B: Good and adequate introduction to the test, but not complete. | Supply more information to the pupils. What is the max score? What type of questions will they find in the test? |
| 2: Type of question | Mark B: There are 3 different types of questions in a proper way. | You also can use a question with drop down list for this test? |
| 3: Use of stimuli | Mark C: You have good pictures for some of the questions. | Make a better connection between the picture stimuli and the question. |
| 4: Use of Bloom's taxonomy | Mark B: You have questions in level-4 at Blooms taxonomy. | No suggestions! |
| 5: Score and feedback | Mark C: You have just a final score as feedback | Discuss the use of negative points for wrong answers. |
| 6: Total valuation | Mark B/C: You have a well performed test and report | Work with the introduction and apply more and better stimuli |

Table 4: Example of student feedback based on the rubrics.

Another source of improvement was the ideas that were given in the peer assessment of the reports. Could good solutions found in someone else's report improve your own test and report in a way that gives you a better mark? Copying questions from each other was neither easy nor directly appropriate, because each multiple-choice test had its own individual goals. At the least, the students can use similar alternative questions or tools when they make their next multiple-choice test.

The table that defined the requirements for the student assignments also provided instructions for an individual note for feedback and reflection. The first part of this note was concerned with documenting the feedback the student gave in the peer assessment process. Most of the students made adequate evaluations, proposals and feedback based on the given requirements for the multiple-choice test and report. Some of them came up with a clear structure for feedback, as in Table 4.

One of the questions in the reflective part asked for opinions about using a table of requirements for different marks. Most students reported that the rubrics had been very useful. They felt that the rubrics made the peer feedback process much easier, more accurate, fairer, more systematic, and more constructive. They also pointed out that it was important to have an open space where they could freely write comments and suggestions on their peers' reports. The rubrics seem to make the students focus closely on each detail: they comment on their peers' reports with a narrow focus on each element (criterion) within the rubrics, which can make them miss the overall picture. Our use of the criterion "overall impression" is therefore evaluated very positively in the empirical data collected from the students' reflections on this work.

A great challenge is to make the content of the rubrics as clear as possible so that each peer feedback is given as fairly as possible. Accordingly, the students also pointed out the importance of our classroom walkthrough. It gave everyone a better understanding of the meaning of each element in the rubrics and of how to use it when giving constructive feedback to each other.

There are a few concrete comments on this tool in the reflection notes from the students. We continued to work on it in a study of how to formulate intended learning outcomes in another course, in the spring of 2010.

The principles of the Six Thinking Hats were first presented to the students. Then they were divided into several groups where they were given a small assignment to practice on how to use the tool for peer feedback in later assignments. Through this exercise the students were made aware of the possible use of the Six Thinking Hats as a tool for making constructive peer feedback on the given assignment. Even though several students reported that they used the tool, only a few commented directly on its effect. One of the comments from the students who took the course was:

Using Six Thinking Hats made it easier to give peer feedback. The negative feedback becomes less personal as the peer can reflect that “this is said when wearing the black hat, …” and so forth.

When discussing the tools and their possible effects on making constructive peer feedback, several students pointed out the psychological effects of the method. The tools made them less uncertain when giving peer feedback.

The criteria for peer assessment in Physical Geography have three components: the students were asked to comment on the positive aspects, to comment on the negative aspects, and to suggest improvements to the submitted student work, in a feedback report of about half an A4 page of text. The purpose of the criteria was to ensure uniform feedback to the students. In addition, it was necessary to require the students to be critical in their reviews of their fellow students so that errors and weak points of the submitted work would be clearly visible. The requirement to give negative comments in addition to the positive feedback made this evaluation more legitimate and acceptable in the cases where the students knew each other well.

The student work with multiple-choice assignments and a student report was carried out in both 2008 and 2009. In 2008 the students received only teacher feedback on the first version of their work in the portfolio. This work can be compared with the student work done in 2009, when the tools for peer assessment described above were used. Our impressions as teachers in both courses are:

The multiple-choice tests were in general more advanced, and more tools were used, in 2009. The students’ skills in making multiple-choice tests were higher in 2009. The use of rubrics to set visible and clear goals seems to be the most important tool here.

The students spent more time in 2009 than in 2008. This statement is based on the list of tasks the students had to complete for this work. They had to do more work in 2009 because each of them took part in a discussion and gave personal feedback to three other students. Giving visible and personal feedback to others demands a deeper understanding of the learning material, and more skill, than writing a simple report to a teacher without any consequences.

The teacher had to focus on making adequate rubrics for the student work and had to prepare the students for carrying out the peer assessment.

Most of the students reported that they spent more time on the assignments because they knew that other students in the class, and not just the teacher, would be reading and evaluating their work. They were also more concerned about whether the content of the assignment was correct. A few of the students were skeptical of peer assessment because the assignment could be used as part of the final portfolio. They expressed doubts such as: “If I come up with a lot of good ideas and put a lot of work into my assignment, other students may copy my good work and get better marks without having worked for it. Therefore, I will reserve most of my good ideas and hard work for the final portfolio.”

With student feedback, the instructor spends less time giving feedback on each individual assignment. Some of the students were concerned about not getting the same amount of feedback and comments from the teacher as they usually would. This mattered to them because the teacher was the one who would grade their final portfolio. Others, however, indicated that they were happy to get several evaluations and comments instead of only one evaluation from the teacher.

All the students agreed that giving feedback to the other students in the group took much more time than they would otherwise have spent on this particular assignment. The extra time was spent mainly on consulting the curriculum literature, both to ensure that the comments they gave in their evaluations were correct and to check whether the answers given by the other students in their assignments were correct. The overall result was more time spent studying the subject matter, so that the students gained more knowledge and a better understanding of the themes in the assignment.

The common LMSs used in higher education in Norway do not have proper tools for peer assessment. In the portfolio tool of LMSs such as Fronter and It's learning, there are two main roles. The student role can save student work and read feedback on that work. The teacher role has write access, can write comments directly in the student work, and can give feedback on it in several different ways. For real peer assessment inside the portfolio, each student needs read access to all other student work and the opportunity to give feedback to other students inside the portfolio.

For the peer assessments in our courses, we had to find alternative tools in Fronter. We decided to create common archive folders with sub-folders to which a group of students had write access. In the course ITL111 we composed the groups across the earlier group divisions so that friends and colleagues would not have to assess each other's work. In Physical Geography the whole class was divided into groups alphabetically. Among the campus students this resulted in groups where some of the students knew each other quite well.
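The alphabetical grouping used in Physical Geography can be illustrated with a minimal sketch. It is not the procedure we ran in Fronter, where groups and folders were set up by hand; the function names, the student names and the group size of four are hypothetical and chosen only so that each student has three peers to review.

```python
def alphabetical_groups(students, group_size):
    """Split a class roster into review groups in alphabetical order."""
    ordered = sorted(students, key=str.casefold)
    return [ordered[i:i + group_size] for i in range(0, len(ordered), group_size)]


def review_assignments(group):
    """Within one group, every student reviews every other member's work."""
    return {
        reviewer: [peer for peer in group if peer != reviewer]
        for reviewer in group
    }


# Hypothetical roster, for illustration only.
roster = ["Kari", "Anders", "Ola", "Berit", "Nils", "Eva", "Per", "Ida"]
groups = alphabetical_groups(roster, group_size=4)
assignments = review_assignments(groups[0])
```

With a group size of four, each student in a full group is asked to review three peers. A purely alphabetical split does nothing to separate students who know each other, which is the limitation noted above for the campus students.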

All the students uploaded documents for review to the archive folder. For feedback, we recommended that the students create and use a discussion forum. Some groups made one common forum for a joint response. Other groups chose to create multiple forums with clear headlines, so that it was visible to each recipient which feedback was meant for them. The teacher's control in this peer assessment process was to review the feedback given to the students and compare the reviews given to each of them. Only two students got no feedback from other students, and the teacher had to give ordinary feedback on their assignments. The student work could be developed further until the final submission of the portfolio.

The alternative teaching approach for these cases was traditional portfolio work with feedback from the teacher. Compared with that, peer assessment is more demanding for the students in terms of time spent, but it also gives more effective and meaningful learning.

Our exploration of peer assessment in the formative feedback of themes within ITL111 Digital Competence for teachers (15 ECTS) and GEO102 Physical Geography (15 ECTS) is based on support from tools within the LMS, sets of learning based outcomes, rubrics and Six Thinking Hats.

Net-based peer assessment can be used within an LMS even though most LMSs do not have specific tools for peer assessment. We used Fronter and chose to establish regular archives for publishing the student assignments. Access to these archives was limited to the members of each student group and the teacher. The students gave their peer assessments in the discussion forum.

In courses with many students, the method with peer assessment will provide opportunities for an increased number of feedback responses on student assignments. In turn, this will provide an improved learning outcome for the students.

The overall effect is an improved quality of the student assignments and a deeper learning. The best results were registered with the use of rubrics where the students were presented with clearly defined criteria for expected performance on a sample of different themes within the course. In order to perform the peer review, the students had to acquire the basic knowledge of the various themes. In addition, seeing how others solved the assignment provides the student with reflections on the themes that could improve the student's own final portfolio.

We also confirm earlier research showing that some students are reluctant to give marks in peer assessment, even in formative feedback.

Assessment is for Learning (AILF). Glossary. Retrieved February 15, 2010, from http://www.ltscotland.org.uk/assess/index.asp.

Askheim, G. A. & Grenness, T. (2008). Kvalitative metoder for markedsføring og organisasjonsfag. Oslo: Universitetsforlaget.

ASSESS-2010. Prosjektwiki for ASSESS-2010. Retrieved February 15, 2010, from http://assess2010.wikispaces.com/.

Biggs, J. & Tang, C. (2007). Teaching for Quality Learning at University (3rd ed.). Berkshire, UK: Open University Press, McGraw-Hill Education.

Boud, D. & Holmes, H. (1995). Self and peer marking in a large technical subject. In D. Boud (ed.): Enhancing learning through self assessment. London: Kogan Page.

Brown, G., Bull, J. & Pendlebury, M. (1997). Assessing student learning in higher education. London: Routledge.

Carmean, C. & Haefner, J. (2002). Mind over Matter: Transforming Course Management Systems into Effective Learning Environments. Retrieved May 3, 2010 from http://net.educause.edu/ir/library/pdf/ERM0261.pdf

De Bono, E. Six Thinking Hats. Retrieved February 15, 2010, from http://www.debonothinkingsystems.com/tools/6hats.htm http://www.mindtools.com/pages/article/newTED_07.htm http://en.wikipedia.org/wiki/Six_Thinking_Hats http://www.debonogroup.com/six_thinking_hats.php.

Dysthe, O. & Engelsen, K. S. (2009). Mapper som lærings- og vurderingsform. Retrieved February 15, 2010 from http://www.ituarkiv.no/Emnekategori/1083650082.59/1083574061.39.1.html

Ekker, S. (2009). ASSESS-2010, Pilot – Naturgeografi. Retrieved February 15, 2010 from http://assess2010.wikispaces.com/Pilot+-+Naturgeografi

Falchikov, N. (2001). Learning together: Peer tutoring in higher education. London: RoutledgeFalmer.

Fjørtoft, H. (2009). Effektiv planlegging og vurdering, rubrikker og andre verktøy for lærere. Bergen: Fagbokforlaget and Landslaget for norskundervisning.

Havnes, A. (2005). Medstudentvurdering som læring – erfaringer fra sykepleierutdanningen. Retrieved February 15, 2010 from http://www.hio.no/Enheter/Pedagogisk-utviklingssenter/Arkiv/Tidligere-sider/FoU-prosjekter/Tverrfaglig-forskningssamarbeid-om-peer-learning/Medstudentvurdering-som-laering-erfaringer-fra-sykepleierutdanningen

Liu, N. & Carless, D. (2006). Peer feedback: The learning element of peer assessment. Teaching in Higher Education, Vol. 11, no. 3, July 2006, pp. 279-290. Retrieved February 16, 2010, from http://web.edu.hku.hk/staff/dcarless/Liu&Carless2006.pdf

Munkvold, R. & Nordseth, H. (2009). ASSESS-2010, Pilot – IKT for lærere. Retrieved February 15, 2010, from http://assess2010.wikispaces.com/Pilot+-+IKT+for+l%C3%A6rere

Overbaugh, R. C. & Schultz, L. Bloom's Taxonomy, new version. Retrieved February 15, 2010 from http://www.odu.edu/educ/roverbau/Bloom/blooms_taxonomy.htm

Walker, J. (2004). Å lære å gi og motta konstruktiv kritikk gjennom medstudentvurdering. Retrieved February 15, 2010 from http://jilltxt.net/txt/medstudentvurdering.html